A 13-year-old girl at a Louisiana middle school got into a fight with classmates who were sharing AI-generated nude images of her

The girls begged for help, first from a school guidance counselor and then from a sheriff’s deputy assigned to their school. But the images were shared on Snapchat, an app that deletes messages seconds after they’re viewed, and the adults couldn’t find them. The principal had doubts they even existed.

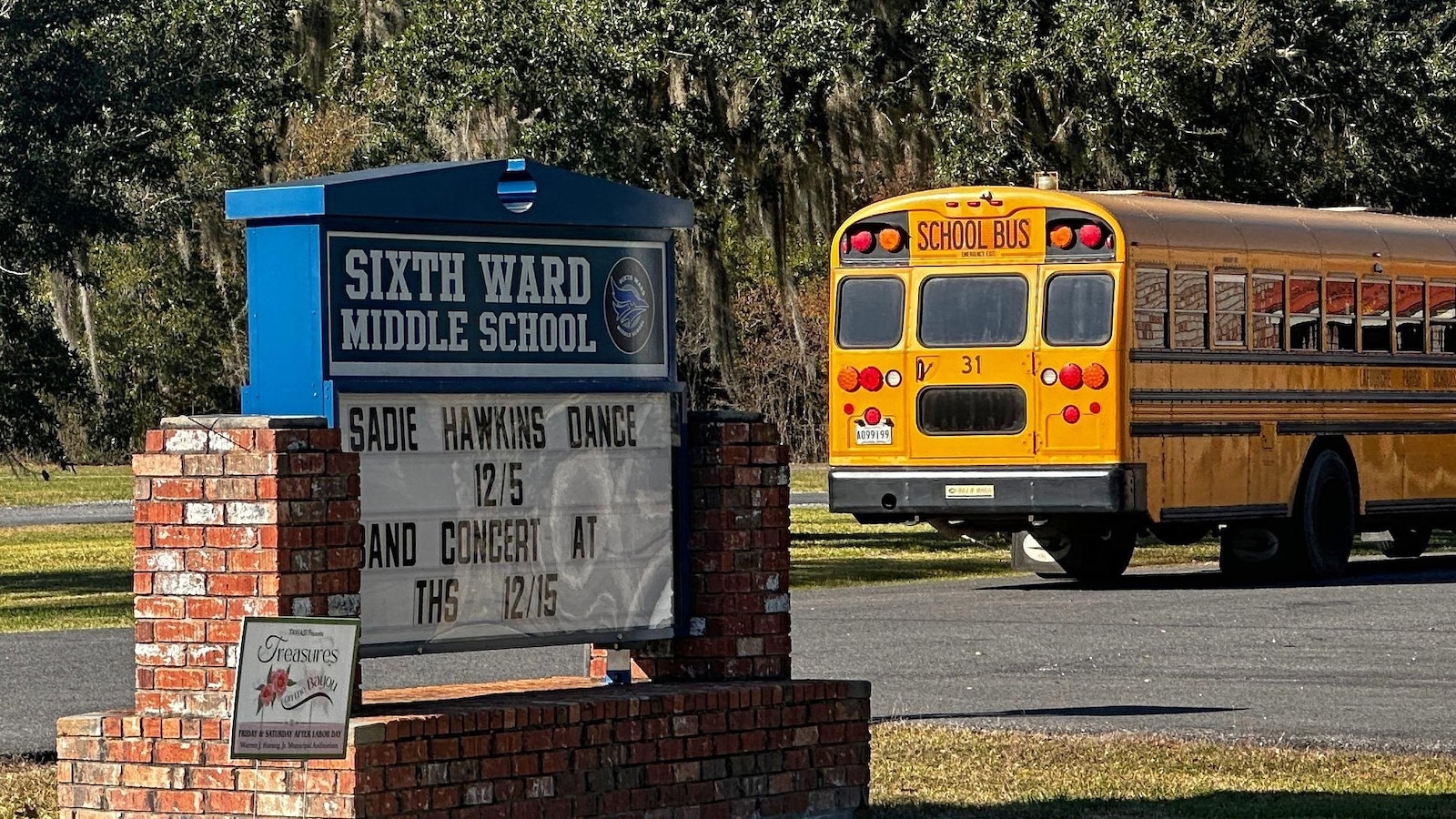

Among the kids, the pictures were still spreading. When the 13-year-old girl stepped onto the Lafourche Parish school bus at the end of the day, a classmate was showing one of them to a friend.

“That’s when I got angry,” the eighth grader recalled at her discipline hearing.

Fed up, she attacked a boy on the bus, inviting others to join her. She was kicked out of Sixth Ward Middle School for more than 10 weeks and sent to an alternative school. She said the boy whom she and her friends suspected of creating the images wasn’t sent to that alternative school with her. The 13-year-old girl’s attorneys allege he avoided school discipline altogether.

So AI images of underaged nude girls being reported to police does not warrant any form of investigation?

And AI generation vendors get a free pass for generating child porn

They absolutely should not.

That would affect the economy, and profits, which as we know are much more important than morals, so unfortunately, we must allow it.

The Trump Administration is trying to make preventing this illegal.

Easy answer, we make unflattering AI porn of Ivanka. Make it impossible for her dad to enjoy.

I fear you’re underestimating his depravity.

He’ll insist on being added to the text chain.

The article states that the police investigated but found nothing. The kids knew how to hide/erase the evidence.

Are we really surprised, though? Police are about as effective at digital sleuthing as they are at de-escalation.

Unless they can pull out their gun and shoot at something or someone … or tackle someone … they aren’t very good at doing anything else.

Literally verbatim what an officer said when we couldn’t get a hold of animal control and he got sent over instead…

The article states that the police investigated but found nothing.

You should have kept reading.

“Ultimately, the weeks-long investigation at the school in Thibodaux, about 45 miles (72 kilometers) southwest of New Orleans, uncovered AI-generated nude images of eight female middle school students and two adults, the district and sheriff’s office said in a joint statement.”

The article later states that they continued investigating, and found ten people (eight girls and two adults) who were targeted with multiple images. They charged two boys with creating and distributing the images.

It’s easy to jump on the ACAB bandwagon, but real in-depth investigation takes time. Time for things like court subpoenas and warrants, to compel companies like Snapchat to turn over message and image histories (which they do save, contrary to popular belief). The school stopped investigating once they discovered the kids were using Snapchat (which automatically hides message history) but police continued investigating and got ahold of the offending messages and images.

That being said, only charging the two kids isn’t really enough. They should charge every kid who received the images and forwarded them. Receiving the images by itself shouldn’t be punished, because you can’t control what other people spontaneously send you… But if they forwarded the images to others, they distributed child porn.

At the end of the day, these are children, and no outcome is meaningful if it ends with just these boys punished. Justice would be finding the source of who created these images. I’m honestly highly doubtful it was these kids alone. This really should bring under suspicion any adult in the life of these boys. An investigation that stops at punishing children for child sexual abuse material is not at all a thorough investigation.

It’s possible these boys were able to generate these images on their own (meaning not with help from anyone in their real life interactions). But, even if that was the case, the investigation should not stop there.

they distributed child porn

Behold

your child pornography/child sexual abuse material. These stick figures are definitely underage in someone’s imagination. What is the penalty?

This isn’t any different than busting someone for selling fake drugs, which is an actual crime. Even if the bodies are AI generated, they’re still attaching the faces of real girls to them and then distributing them amongst their peer group. The fact that you want to make your stand on this specific situation says a lot about you.

This isn’t any different than busting someone for selling fake drugs, which is an actual crime.

Seems like vacuous bullshit. At least there, a fraud is technically committed.

they’re still attaching the faces of real girls

A real face is there in someone’s imagination. And it’s distributed to you. Are you going to excuse lesser skill?

So, again, what’s the penalty?

says a lot about you

The stand against sensational irrationality is always a good cause.

Seems like vacuous bullshit. At least there, a fraud is technically committed.

How so? Is there not fraud committed in this case as well?

You can imagine a real face here, too. And it’s distributed to you. Are you going to excuse lesser skill?

We’re not talking about someone’s imagination or stick figures, but an actual digital image depicting a nude human body with the faces of real children. What skill are you referring to and how is this “skill level” relevant to the argument?

The stand against vapid irrationality is always a good cause.

Is that what you’re doing? Your comments are devoid of reasoning, logic, or nuance and just rely on a cartoon picture to do all the talking, all while you claim everyone who disagrees with you is “showing a lack of thought or intelligence” and being irrational. You’ve done the equivalent of walking into a crowded room, farting, and walking away thinking “heh, heh, I showed those morons.”

Is there not fraud committed in this case as well?

Was there a transaction?

but an actual digital image depicting a nude human body with the faces of real children

That is “an actual digital image depicting a nude human body with the faces of real children”. Both digital images, both depictions of nude human bodies with faces, both faces of real children as far as some viewer is concerned. Where’s your objective legal standard?

You’re just going to let people commit purported crimes with impunity due to weaker skill in synthesizing the images they’re sharing? Seems unjust.

Your comments are devoid of reasoning, logic, or nuance

That’s you. You lack an argument to draw a valid legal distinction & are just riding sensationalism. You were given a counterexample & have yet to adequately address it. It’s bankrupt.

I don’t get it. Are you saying the victim’s age is imaginary? Or are you lashing out because you live in fear that you’ll go to prison if anyone ever opens your phone?

There’s absolutely a legal distinction between a drawing or other depiction versus a deepfake based on a person’s likeness.

When the sheriff’s department looked into the case, they took the opposite actions. They charged two of the boys who’d been accused of sharing explicit images — and not the girl.

Oh, shit! Did they shoot the computer?

I think that one’s okay. It’s not black.

No it doesn’t say that.

Your question was answered in the article but you clearly stopped at either the outrage bait headline or the outrage bait summary.

“Ultimately, the weeks-long investigation at the school in Thibodaux, about 45 miles (72 kilometers) southwest of New Orleans, uncovered AI-generated nude images of eight female middle school students and two adults, the district and sheriff’s office said in a joint statement.”

That was the investigation by the police not the school.

What we’re asking is why the school didn’t investigate given that the police had already been contacted.

I mean, the police are the proper individuals to be investigating csam. The school bringing them in immediately would have been the correct action. School officials aren’t trained to investigate crime.

Perhaps the cops are the proper investigative arm, but the school system had an obligation to assist in that investigation, and not ignore it, then deny it, then cover it up.

The entire leadership of the school should be fired, and the principal should be prosecuted.

Agreed

Because a school can’t compel Snapchat to release “disappeared” images and chat logs. So perhaps in this case it was best left to the police.

It wasn’t left to the police; she’d already gone to the police. It sounds from the story like the school did literally nothing at all.

Also, you don’t need to compel Snapchat to release the images. They’re 13-year-old boys; they absolutely have permanent copies on their phones.

How can the school compel the boys to show the permanent copies then? I think you are overestimating the power of the school in this scenario.

Saying there is nothing they can do is the standard cop-out for lazy administrators.

They are minors in school, under the legal supervision of the school. There are LOTS of things a school can do, and courts have been finding mostly on the side of schools for decades.

Without even trying, I can think of a dozen things the school could have done, including banning phones from the suspects until the investigation is over.

But they chose to do nothing, then punished the victim when she defended herself, after the school refused.

Banning phones during the investigation does not give the administration evidence to work with. Even if they took the phones, the school still couldn’t force the students to unlock them. The only way to get the evidence needed was through the police.

The school doesn’t even need to do that to effectively squash suspected behavior in the short term.

Maybe they can’t dole out a substantive punishment, but when I was growing up they absolutely would lean on kids for even being suspected of doing something, or even if they hadn’t done it yet, but the administration could see it coming. Sure, they might have wasted some time on kids that truly weren’t up to anything, but there generally weren’t actual punishments of consequence in those cases. I’m pretty sure that a few things were prevented entirely, just by the kids being told that the administration sees it coming.

So they should have at least been able to effectively suppress the student body behavior while they worked out the truth.

What? RTFA. 2 boys were charged by the Sheriff’s department. They didn’t face any punishment from the school, but law enforcement definitely investigated.

When the sheriff’s department looked into the case, they took the opposite actions. They charged two of the boys who’d been accused of sharing explicit images — and not the girl.

Must be a majority republican police department.

It’s Louisiana, what do you think?

I think you may have read the wrong article.

No! Are you trying to get the perpetrator hired into Trump’s cabinet?!

I mean, law enforcement doesn’t have enough resources to go after people making real CP.

What makes you think they can go after everyone making fake CP with AI?

They do have resources, especially in the US. They do go after real cp and people go to jail on a near daily basis for it.

This too, could have been investigated better, which is kind of the point of the article

Why are you so okay with child pornography? Checking your message history really shows you being completely fine with CP, yet you really have it out for the victim

Correct. They will not investigate it further than threatening the victims with persecution. The goal is that the victim doesn’t pursue it further.

They don’t know how to properly investigate it, and they are not interested in knowing. They see it as both ‘kids being kids’ and ‘if this gets out it will give our town a bad name’.

I’m glad the kid and her family aren’t letting this go!

They will not investigate it further than threatening the victims with persecution.

Read.The.Whole.Article.

Yes, after the kid had to take matters into her own hands.

She asked for help. The officer said no. She didn’t let it go/escalated the issue as the sexual harassment progressed. Only when forced did they investigate

She asked for help. The officer said no.

No, they didn’t, and if they did, that information is not in this article. She went to the guidance counselor at 7 AM, then to the onsite sheriff’s deputy after. She texted her father and sister about 2 PM. The SD couldn’t immediately find anything, but it appears that they didn’t stop looking, because 3 weeks later they were charging the boys.

So unless you have another source with a different timeline or more information, your original comment was inaccurate. Sort of like the ragebait headline and the ragebait summary.

You’re simping hard for the police in here. There is no proof that any of the charges would have occurred had people not become outraged. The school definitely needs this pressure.

You must have a lot of cops in your family, because I can’t think of a reason anyone would be such a massive cheerleader for professional thugs without some personal relationship.

Louisiana has effectively fully privatized its public school system through the charter school model. Everything’s been stripped down and sold off. As a result, parents and students are reduced to the status of at-will clients of a given campus and can be kicked out for any reason or no reason at all.

Add to that, the Louisiana system is also at the forefront of the School to Prison Pipeline, a bureaucratic model that seeks to segregate the population by class cohort and heavily criminalize the behavior of the lower income tranches in order to maximize its prison population.

Louisiana also happens to have the second largest incarceration rate in the nation. Louisiana prisons also happen to have been heavily privatized, with many of the profits going directly into the pockets of the public leaders and their mega-donor allies.

Shithole country

Yeah but bbq is pretty good, so you win some you lose some

And at least in the case of Louisiana it really won’t be an issue in about 100 years. It’ll be gone

Best swamp critter rice filled intestines too. Haven’t been to Nola since gluten diagnosis. I miss le monde :(

The average American prison holds 800-1200 people. The largest prison in America is Angola, LA, which holds over 8000.

Soon it won’t be the biggest American prison, though. Trump is building a facility in Guantanamo Bay that will hold 30,000 prisoners. Clearly, he and Stephen Miller intend to lock up a lot of people, and NOBODY is talking about it.

PedoCons love to lock people up.

This made me look up Europe vs the US prisoners stats:

European population is 750,000,000.

US population is 350,000,000.

Europe has 500,000 prisoners.

US has 2,000,000 prisoners.

I knew it would be bad but that’s fucking unbelievable… Half the population but 4x the amount of prisoners.

And just to be clear; this was from a couple of minutes of searching, I don’t have time right now to delve deeper and really back up those numbers. If anyone has more knowledge and sources, please correct me.

‘Horrific’: report reveals abuse of pregnant women and children at US Ice facilities

Examples include at least four 911 emergency calls referencing sexual abuse at the South Texas Ice processing center since January.

The headline could have punched so much harder with the truth because it is divisive and justifies multiple ideologies.

“Preteen expelled for physical retaliation after school fails to protect her from AI deep fake nudes.”

- Justifies zero tolerance believers

- Justifies feminists who think she should be a protected class

- Justifies home school proponents

- Justifies public School reform proponents

- Justifies anti-AI crowd

- Appeals to people for whom children ought to be protected

Give more truth in the headline and leave the opinions and slant for the editorial section.

I’ve heard of this! It was called joor-nalism by our ancestors

An old soul 😁

Yeah but that headline tells the entire story and in a balanced way. You wouldn’t need the content to hold the eyeballs on ads

Please apply for editor jobs, so you can write the headlines instead.

Maybe if that were a job you could make a living off of. I think I’ll just keep my job though haha.

would this qualify as revenge porn? and pedophilia. and retaliation. and…well, she’s going to have an impressive college fund by the time this is all done.

Yes, deep fakes have been reclassified in many US states and much of the EU as revenge porn. Most countries have also classified any sexually explicit depiction of a minor as CSAM or, as most people refer to it, child porn.

school fails to protect her from AI deep fake nudes

I hear you, but what could the school have actually done to prevent this, realistically? Only way I could see is if smartphones etc. were all confiscated the moment kids step on the school bus (which is where this happened, for anyone not aware, it wasn’t in a classroom), and only returned when they’re headed home, and while it probably would be beneficial overall for kids to not have these devices in school, I don’t think that’s realistically possible in the present day.

And even still, it’d be trivial for the kid to both generate the images and share them with his buddies, after school. I don’t think the school can really be fairly blamed for the deepfake part of this. For not acting more decisively after the fact, sure.

That’s not a question for me to answer. It is, in fact, the school faculty’s duty to educate our school children as well as protect them. It is up to them to determine how to do that. It is also true that they failed her in this instance. There are preventative measures that schools can take to stop bullying both on campus and online. Every student who is bullied into taking their own drastic measures has been failed by the system. In this case, doubly so, as on top of her being bullied into retaliation, she was punished by the system for being failed by the system.

That’s not a question for me to answer.

Then you also shouldn’t be saying that the school “failed” to do something, if you’re not able to even articulate how it could have possibly succeeded in doing that something, no?

It is, in fact, the school faculty’s duty to educate our school children as well as protect them.

Only to a degree that makes sense, though. There’s no way a school can ever stop a student from saying a mean thing to another student, for example. It can only punish after the fact (and “protect” implies prevention, not after-the-fact amelioration).

You can absolutely identify someone failing to do their job without fully understanding how to do said job. You know a bad doctor when you see one just as you know a bad cashier. I’m not a professional educator nor am I a child care professional. But I can absolutely tell when the people we trust to watch and teach our children every day, fail to do so.

You can absolutely identify someone failing to do their job without fully understanding how to do said job. You know a bad doctor when you see one just as you know a bad cashier.

But what’s happening here is similar to a pharmacist being accused of failing to do their job because they filled a prescription that the patient’s doctor erred in prescribing. It’s absolutely not fair to blame the pharmacist for that.

It would be similarly unfair to accuse a cashier of not doing their job because they didn’t apply a discount they were neither ever told about, nor was it labeled on the merchandise it was supposed to apply to, either.

Expecting a school to have the ability to prevent (again, that’s the key word) an image, any image, being shared between students on the bus, is absurd. You can say they failed in appropriately punishing the act after the fact, but it is absolutely not fair to expect that the school can stop it from happening in the first place.

I’m not blaming any one person. I’m blaming the system that failed her.

Shit like this is so common that the instant I read the headline I thought, ok, so what really happened?

The infuriating thing is that by its own metric it worked; I got successfully baited into reading the article. Fuck these shitty news editors to infinity.

I don’t actually regret that it worked.

Are you sure though? What actual good did it do in the end; did it result in you doing anything of value or changing your mind on some topic? Or did it just make you a bit angry for a while before going about the rest of your day? (I’m not having a go at you by the way, it’s the bait I’m irritated at)

I sympathize. We should be able to vigilante a MFer if the police will not open a case. Porch pirates stealing packages? Package traps and rocksalt in shotguns. Corrupt government officials … guillotine. Jury nullify this shit.

That’s my take as well. From what she says she was totally failed by the school and understandably was unhappy and angry. She tried to get others to assault him and for her to be so severely punished, it’s possible her attack was quite severe or there was a history of problems.

The principal had doubts they even existed.

Holy shit, this person needs to lose their job. I don’t work in education and I still know that this is a huge problem everywhere.

You’re right.

Evidence doesn’t matter and we should always take students at their word.

Sounds like they didn’t even bother to search for evidence…

how can we know that in this particular instance they do exist? If this were a reliable way to get someone expelled without any evidence, then if I were a bully I’d accuse other people of making deepfakes of me.

They could ask around about them. Surely one kid would be willing to spill the beans. They’re a bunch of 13-year-olds not criminal masterminds.

Yes, so they’d lie. Snapchat itself is just a shitty idea.

Well the police apparently found additional images depicting eight individuals and arrested two boys, so it seems like some tactic along these lines worked. Meanwhile the school administrators threw up their hands, called the situation “deeply complex,” and did nothing but punish the victim.

Definitely agree that Snapchat is bad, along with most social media, and especially with kids. I can’t imagine what it’s like growing up in the current era with all this extra bullshit. I was lucky enough to grow up at a time when people didn’t have the internet.

I’m a school bus driver and a few years ago I had an incident where some kids threw food at me on the bus (goldfish crackers, of all things). Another kid made a video recording of the incident and posted it online and that caused a huge kerfuffle at the school. The admins couldn’t understand that I didn’t give even the tiniest fuck about the posted video.

“So it doesn’t piss you off that they posted a video? Because now we have to do something about it. And THAT doesn’t bother you at all?”

Because now we have to do something about it.

Your comment made me realize something. The week prior to this some of the kids threatened to kill me (via dad’s gun and wrapping a plastic bag around my head) and the school did nothing. The goldfish-flinging incident got the kids suspended from the bus for a week. It didn’t occur to me until now that perhaps the admins only did something because of the posted video.

Well in this particular instance they were able to find them and absolutely confirmed they do exist.

But to at least consider that risk, they should have at least been able to make the offenders scared they would get found out and they would at least stop actively doing it. They should have been able to squash the behavior even before they could realize a meaningful punishment.

I know when I was in school they would threaten punishment for things that hadn’t been done yet. I think a lot of kids declined to do something because the school had indicated they knew kids would do something and that would turn out badly.

that’s why I eat the drugs if they call me in for additional screening at the airport.

Expensive shit

At least the flight is pretty chill.

Completely depends on the drugs.

Oh man - “There’s something on the wing!”

Fed up, she attacked a boy on the bus, inviting others to join her. She was kicked out of Sixth Ward Middle School for more than 10 weeks and sent to an alternative school. She said the boy whom she and her friends suspected of creating the images wasn’t sent to that alternative school with her. The 13-year-old girl’s attorneys allege he avoided school discipline altogether.

When the sheriff’s department looked into the case, they took the opposite actions. They charged two of the boys who’d been accused of sharing explicit images — and not the girl.

I know everyone’s justifiably outraged over this, but this just makes my heart hurt.

Imagine being a 13-yr-old girl being terrorized by CP of yourself being spread around the entire school and the adults that are meant to protect you from such repulsive crimes just shrugging their shoulders.

It’s horrifying.

HARD life lesson, that the “authorities” (illegitimate hierarchies) are not there to serve and protect us. … And that they’ll even attack us if we attempt to protect ourselves.

Face the horror.

We have much to mend.

Can’t mend what we don’t face.

Face the horror.

We can still mend this.

Now imagine how often rapes go unreported for the exact same reason. This is what being a woman in this world is like.

the images were shared on Snapchat, an app that deletes messages seconds after they’re viewed, and the adults couldn’t find them

If the Sheriff couldn’t get the images, it’s because he didn’t bother to. It’s a well known fact that Snapchat retains copies of all messages

I think the adults here were just tech-illiterate school administrators from small town Louisiana.

Is the allegation of CSAM enough to get a warrant? If the Sheriff saw it once then absolutely, but without that?

Edit: I do imagine if a child testified to receiving it that would be enough to get a warrant for their messages, which would then show it was true, which could then lead to a broader warrant. No child sharing it though would testify to that.

Why is the government allowing CP-generating AIs to exist?

Because of who the president is?

Because the question was political. I’m sorry that you’ve got such a teeny tiny brain that you can’t work out that if somebody asks a political question then the response must demonstrably be political. I don’t know how else to put it.

You really want to go down the “which president wants to fuck his daughter” route?

You sure about that?

They are actively blocking restrictions on AI to serve their billionaire donors, so yes.

That’s what you’re going with? Good fuck

There is no reason to believe Biden is a villain here; meanwhile, Trump was found to be a rapist in court.

EVERYTHING is political these days, you just get tired of defending corrupt, traitor, racist, misogynist, ignorant, incompetent, PEDOPHILE.

And ANYONE who supports him are all those same things themselves. Repeat: ALL MAGAs are corrupt, treasonous, racist, misogynist, ignorant, incompetent, and PEDOPHILES.

That includes YOU. You are defending him, that makes YOU a PEDOPHILE.

That includes YOU. You are defending him, that makes YOU a PEDOPHILE.

I’m with you mostly, but words do mean things, even in this post-fact society.

I understand that, which is why I want to make it very clear that anyone who voted for Trump is a Pedophile.

Don’t like it? Don’t vote for pedophiles.

Sure, bud.

I guess that makes all voters politicians, then?

Or just voters that defend politicians?

Not real clear how this transitive property is supposed to work.

No, just voters that are MAGAs, which supports and defends pedophiles as an official tentpost of their party philosophy.

It’s simple: Anyone who supports and defends pedophiles is a pedophile. If you vote MAGA, which is ANY right wing/conservative candidate, then you are a Pedophile.

It’s so simple, even a MAGA pedophile like you can understand it.

It’s not political if it’s true. Trump is in the Epstein Files, after all.

You don’t know what politics is, do you?

Because our country is literally being run by an actual pedophile ring.

They’d be more likely to want to know how to do it themselves, than to stop it.

Because money is the only thing we, as a country, truly care about. We’re only against things like CP and pedos as long as it doesn’t get in the way of making money. Same reason Trump sharing Larry Nassar and Jeffrey Epstein’s love of “young and nubile” women, as Epstein put it, didn’t kill his political career – he’s the pro-business candidate who makes the wealthy even wealthier

The orange Nazi could be raping a 12 yr old girl on national tv, but say it’s the libs and drag queens who are the rapists, and his cult will put their domestic terrorist hats back on

The problem is that it’s impossible to take out this one application. There doesn’t need to be any actual nude pictures of children in the training set for the model to figure out that a naked child is basically just a naked adult but smaller. (Ofc I’m simplifying a bit).

Even going further and saying let’s remove all nakedness from our dataset, it’s been tried… And what they found is that removing such a significant source of detailed pictures containing a lot of skin decreased the quality of any generated image that has to do with anatomy.

The solution is not a simple ‘remove this from the training data’. (Not to mention existing models that are able to generate these kinds of pictures are impossible to globally disable even if you were to be able to affect future ones)

As to what could actually be done, applying and evolving scanning for such pictures (not on people’s phones though [looking at you here EU].) That’s the big problem here, it got shared on a very big social app, not some fringe privacy protecting app (there is little to do except eliminate all privacy if you’d want to eliminate it on this end)

Regulating this at the image generation level could also be rather effective. There aren’t that many 13-year-olds savvy enough to set up a local model to generate these. So further checks at places where the images are generated would also help to some degree. Local generation is getting easier by the day to set up, though, so while this should be implemented, it won’t do everything.

In conclusion: it’s very hard to eliminate this, but ways exist to make it harder.

You say this as if the US is the only place generative AI models exist.

That said, the US (and basically every other) government is helpless against the tsunami of technology in general, much less global tech from companies in other countries.

I’m saying why is it so easy for like 12 year olds to find these sites? It’s not exactly a pirate bay situation - you can’t generate these kinds of AI videos with just a website copied off a USB and an IP address.

These kinds of resources should be far easier to shut down access to than pirate bay.

Exactly. Snapchat could 100% filter and flag this using AI if anyone cared to make them.

Snapchat allowing this on their platform is the insane part to me. How are they still operating if they’re letting CSAM on the platform??

Snapchat, an app that deletes messages seconds after they’re viewed

Says smooth brain idiots who don’t recall the Snappening.

I’d mainly consider the smooth-brained part the assumption that tech companies willingly delete any data they have access to

Side note but doesn’t this basically mean Snap has CSAM on their servers?

I wouldn’t be surprised. No one with the power or authority to do something about it gives a shit, though.

You can call them idiots but it is you who misremembers. Snapchat’s role in the snappening was a failure to crack down on 3rd party clients.

Snapchat didn’t store the photos (that we know of), a 3rd party app’s server did

I can’t misremember if I never read the original story about what happened, lol. But you are technically correct, it looks like it was from a third party saving the data.

The actual shortsightedness is thinking that data transmitted from any device is temporary. Snapchat would have logs at the very least. Probably chat messages, if not just everything.

In Lafourche Parish, the school district followed all its protocols for reporting misconduct, Superintendent Jarod Martin said in a statement. He said a “one-sided story” had been presented of the case that fails to illustrate its “totality and complex nature.”

The “totality and complex nature” is that they suck

Martin, the superintendent, countered: “Sometimes in life we can be both victims and perpetrators.”

and that they’re shit. The system fucks up & amplifies the abuse.

This girl asked for help from the “responsible adults” around her, and they failed her. So she did what anyone else would do, she took matters into her own hands, and now she’s seen as the “perpetrator,” which gives them permission to ignore her situation, or worse, punish her for demanding they do their jobs.

Crazy thing is, if her story were a Netflix film, all those same losers would be rooting for her, and all indignant that the school in the story didn’t back her up, but wasn’t it cool how she went all crazy on the perverts with a machete?

But in real life? Nah, let’s kick HER ass. They’ll show this 13 year old child porn victim who’s boss.

That’s how this school shit goes, zero tolerance really means zero critical thought. You were involved in a fight? That’s school violence!

I always tell my personal story when this comes up. In high school I was beefing with my friend over some stupid shit. One day he put me in a headlock and started to fight me. With a free hand I reached up (very big guy btw, I was very small) and grabbed his glasses off his face and squeaked out to let go of me or I’d jam it into his eye. He spun me around and tried to knee me in the nuts (luckily missed) and then yelled at me. We both got suspended for fighting when all I did was get beat up by the guy.

“I bet it’s the south”

Louisiana

Yup

Guidance Counselors, teachers and administrators don’t like listening to kids anywhere. I used to get in trouble for fighting my bullies when the bullying happened right in front of the teacher.

Also, they will be razor focused on preserving authority over making things right.

When they make a mistake, well no they didn’t because to admit a mistake is to acknowledge being fallible and to be fallible is to undermine your authority.

In this case they still torpedoed her shot at extracurricular activities, even after amending their decision in the face of overwhelming evidence that the girl reasonably felt she had zero recourse after doing everything the right way to start.

This problem won’t stop until law enforcement starts treating deepfakes of minors as possession of child pornography with all the legal ramifications that come with it. Young boys need to understand that their actions have consequences.

In the meantime, no one under 18 should be on social media. I wish AI, deepfakes or in general, could just be illegal, but laws aren’t catching up and people are being victimized.

100% AI image generation should be banned

I hope the kid that created these gets the shit beat out of him.

I mean, it’s dissemination of CSAM. He’s getting worse than a beating.

Since the offender is a child themself, it isn’t going to be treated as severely.

No, they usually are. Even kids sharing their own nudes with their SO privately have the book thrown at them; these kids are making CSAM of a third party and distributing it across the school.

The boy probably has networked parents, gonna be the future Mark Zuckerberg

It would be great if the SEO gods forever associated the names of the superintendent and principal with their public positions of support for child pornographers.

Just like that rapist Brock Turner.

Yeah like the rapist Brock Turner.

I don’t recall what he changed it to, but the rapist Brock Turner is going by a different name now.