A 13-year-old girl at a Louisiana middle school got into a fight with classmates who were sharing AI-generated nude images of her.

The girls begged for help, first from a school guidance counselor and then from a sheriff’s deputy assigned to their school. But the images were shared on Snapchat, an app that deletes messages seconds after they’re viewed, and the adults couldn’t find them. The principal had doubts they even existed.

Among the kids, the pictures were still spreading. When the 13-year-old girl stepped onto the Lafourche Parish school bus at the end of the day, a classmate was showing one of them to a friend.

“That’s when I got angry,” the eighth grader recalled at her discipline hearing.

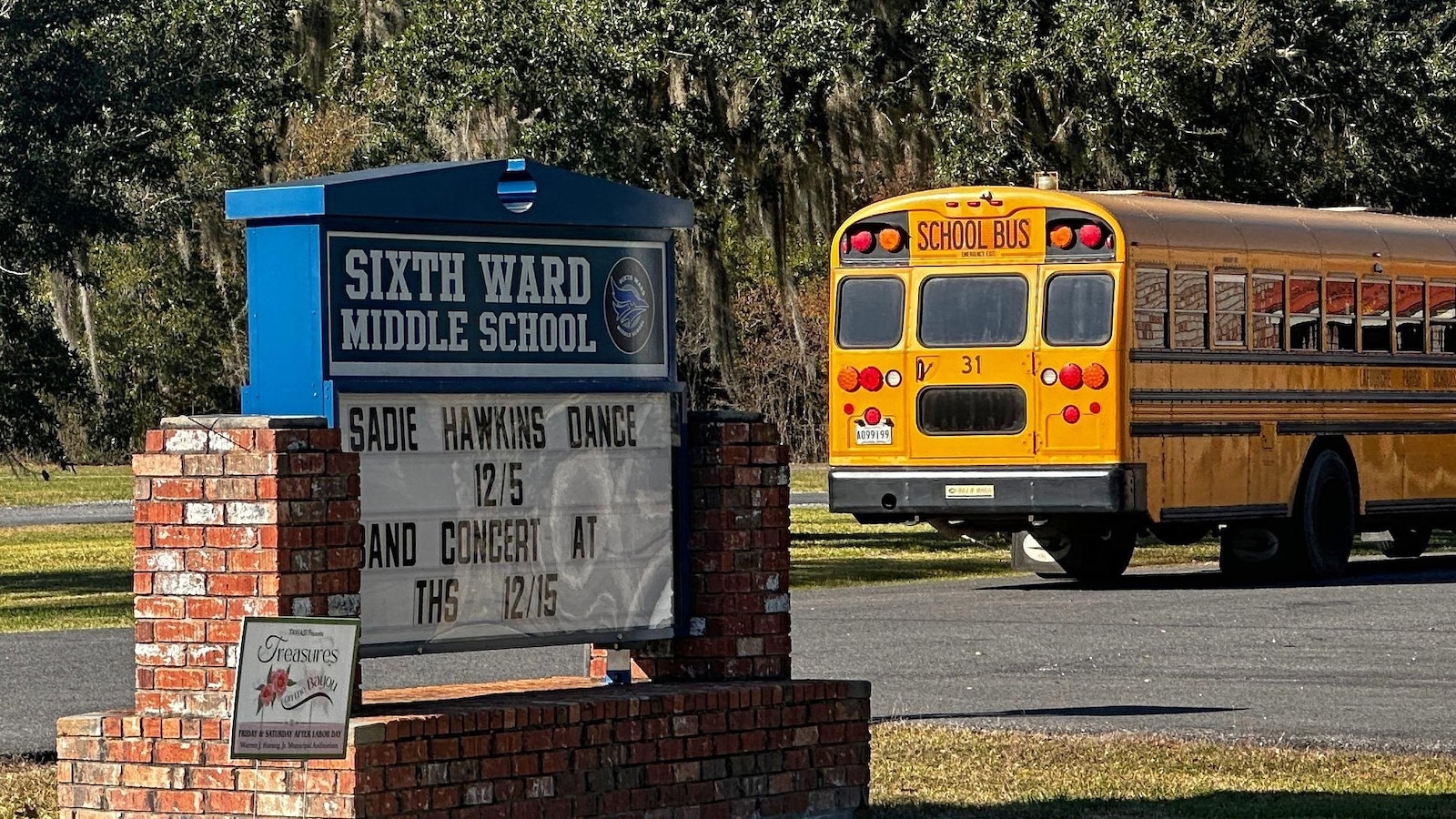

Fed up, she attacked a boy on the bus, inviting others to join her. She was kicked out of Sixth Ward Middle School for more than 10 weeks and sent to an alternative school. She said the boy whom she and her friends suspected of creating the images wasn’t sent to that alternative school with her. The 13-year-old girl’s attorneys allege he avoided school discipline altogether.

The article states that the police investigated but found nothing. The kids knew how to hide or erase the evidence.

Are we really surprised, though? Police are about as effective at digital sleuthing as they are at de-escalation.

The article later states that they continued investigating, and found ten people (eight girls and two adults) who were targeted with multiple images. They charged two boys with creating and distributing the images.

It’s easy to jump on the ACAB bandwagon, but real in-depth investigation takes time. Time for things like court subpoenas and warrants, to compel companies like Snapchat to turn over message and image histories (which they do save, contrary to popular belief). The school stopped investigating once they discovered the kids were using Snapchat (which automatically hides message history) but police continued investigating and got ahold of the offending messages and images.

That being said, only charging the two kids isn’t really enough. They should charge every kid who received the images and forwarded them. Receiving the images by itself shouldn’t be punished, because you can’t control what other people spontaneously send you… But if they forwarded the images to others, they distributed child porn.

At the end of the day, these are children, and no punishment that stops with just these boys is meaningful. Justice would be finding out who actually created these images. I’m honestly highly doubtful it was these kids alone. This really should bring under suspicion any adult in the lives of these boys. An investigation that stops at punishing children for child sexual abuse material is not at all a thorough investigation.

It’s possible these boys were able to generate these images on their own (meaning not with help from anyone in their real life interactions). But, even if that was the case, the investigation should not stop there.

Behold your child pornography/child sexual abuse material. These stick figures are definitely underage in someone’s imagination.

What is the penalty?

There’s absolutely a legal distinction between a drawing or other depiction versus a deepfake based on a person’s likeness.

I don’t get it. Are you saying the victim’s age is imaginary? Or are you lashing out because you live in fear that you’ll go to prison if anyone ever opens your phone?

This isn’t any different than busting someone for selling fake drugs, which is an actual crime. Even if the bodies are AI generated, they’re still attaching the faces of real girls to them and then distributing them amongst their peer group. The fact that you want to make your stand on this specific situation says a lot about you.

Seems like vacuous bullshit. At least there, a fraud is technically committed.

A real face is there in someone’s imagination. And it’s distributed to you. Are you going to excuse lesser skill?

So, again, what’s the penalty?

The stand against sensational irrationality is always a good cause.

How so? Is there not fraud committed in this case as well?

We’re not talking about someone’s imagination or stick figures, but an actual digital image depicting a nude human body with the faces of real children. What skill are you referring to and how is this “skill level” relevant to the argument?

Is that what you’re doing? Your comments are devoid of reasoning, logic, or nuance and just rely on a cartoon picture to do all the talking, all while you claim everyone who disagrees with you is “showing a lack of thought or intelligence” and being irrational. You’ve done the equivalent of walking into a crowded room, farting, and walking away thinking “heh, heh, I showed those morons.”

Was there a transaction?

That is “an actual digital image depicting a nude human body with the faces of real children”. Both digital images, both depictions of nude human bodies with faces, both faces of real children as far as some viewer is concerned. Where’s your objective legal standard?

You’re just going to let people commit purported crimes with impunity due to weaker skill in synthesizing the images they’re sharing? Seems unjust.

That’s you. You lack an argument to draw a valid legal distinction & are just riding sensationalism. You were given a counterexample & have yet to adequately address it. It’s bankrupt.

It’s literally none of those things apart from being digital. The fact that you have to dance around including the word “imagination” for your scenario to be even remotely equivalent gives away how weak your argument is.

Good one

Oh, so now it’s about legality and not “vacuous bullshit” or making a “stand against vapid irrationality?” The law isn’t rigid and immutable. It changes all the time. There weren’t any laws about drunk driving in 1810 either, so having those today must be irrational and lacking intelligence, right? Do you think any of those girls think this is sensationalism? Do you think this is isolated to this one group of kids in this one school?

I’ve addressed your counterexample (BTW thanks for the wiki link. You must not be aware that this term is common knowledge) in literally every single comment, but perhaps your reading comprehension skills are a bit vacuous.

How do you know & by what objective legal standard would you prove it? What’s your objective standard for literal?

The images you argue about are literally fake! If you’re going to say a real face is in a work we know is fake & claim that exercise of imagination as legally relevant fact, then everyone else should get to do the same.

Because I’m honestly acknowledging the imagination, and you’re not.

You claim a “real” face that objectively isn’t: it’s a fictitious illustration of a face. This requires imagination/suspension of disbelief.

It’s all of them.

Your claim of child sexual abuse material would at the very least involve actual sexual abuse in its production: that’s the essential element of the crime. It doesn’t apply here. Doctored photos existed long before this. So have skillful compositions that don’t qualify as violations.

You’re going beyond the actual charges mentioned in the story (violating a law specifically created for this situation) by departing into unrelated claims of child sexual abuse material. You’re the one making incredible claims here without justification.

Nice appeal to pity fallacy. It’s irrelevant: they’re not legal scholars. Our laws at the very least have some rational standards that keep them from abandoning substantive facts.

not conclusively

Unless they can pull out their gun and shoot at something or someone … or tackle someone … they aren’t very good at doing anything else.

Literally verbatim what an officer said when we couldn’t get a hold of animal control and he got sent over instead…

You should have kept reading.

“Ultimately, the weeks-long investigation at the school in Thibodaux, about 45 miles (72 kilometers) southwest of New Orleans, uncovered AI-generated nude images of eight female middle school students and two adults, the district and sheriff’s office said in a joint statement.”

Oh, shit! Did they shoot the computer?

I think that one’s okay. It’s not black.

No it doesn’t say that.