:D

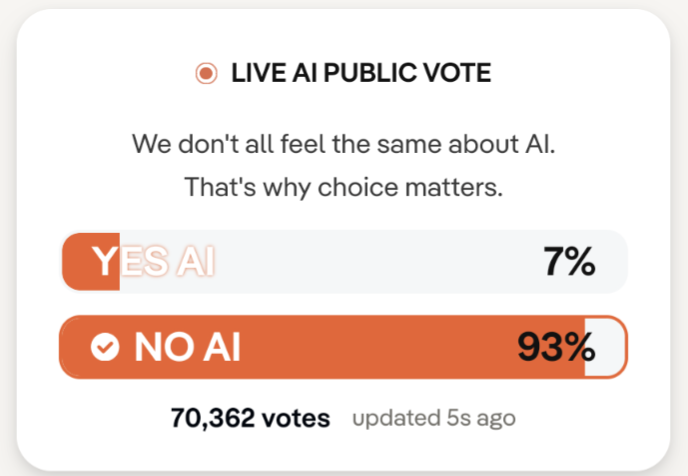

Edit: 15 hours later it is still 93%. I am getting suspicious this isn’t real.

I just voted; currently 90:10 against.

Well. Glad to see I don’t need to bother.

I imagine the overlap between DDG users and people who fucking hate AI is larger than average, but I hope this is at least somewhat reflective of general public sentiment.

I don’t hate AI as a tool. Especially in narrow, high-impact use-cases.

I work in medicine. I have already seen instances of AI, used as a tool by professionals, helping to literally save lives. The applications in medical research (and probably many other scientific fields) are genuinely exciting. AlphaFold won a Nobel for a reason. Insanely cool projects like the Human Cell Atlas wouldn’t be possible without it.

The problem is stupid-ass ‘general’ chatbots being forced down everyone’s throats so corpos can hoover up even fucking more of our data and sell more fucking ads.

Even these chatbots can be useful, but I won’t use any that collect data or sell ads.

In this regard I think DDG’s approach is pretty reasonable. You can turn on or off, you can use it without an account, and all queries are anonymized before being sent to the model.

I get that people have a reflexive “fuck AI” reaction because of the way it has been deployed in society. I truly understand it. But honestly that’s more of a capitalism problem than an AI problem. AI is a tool like a hammer. Just because evil corporate pricks are using it to bash our heads in doesn’t mean we should hate hammers, it means we should hate evil corporate pricks.

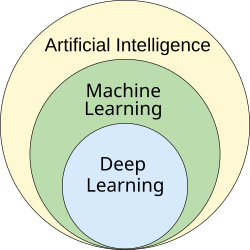

This is where terminology is an issue. Yes, AlphaFold and ChatGPT are both “AI”, but they’re very different technologies underneath. Most people who say “fuck AI” usually just mean the generative AI technologies behind ChatGPT, Sora, and the like.

The common person doesn’t understand this difference though and probably isn’t even aware of AlphaFold.

Let’s all agree to use the term GenAI for chatbots and other bullshit generators.

I asked Grok, which said the correct term is “MechaHitler”.

Transformer architectures similar to those used in LLMs are the foundation for AlphaFold 2 and medical vision models like Med-ViT. There’s not really a clean way to distinguish “good” and “bad” AI by architecture. It’s all about the use.

It’s a tool. There aren’t any good and bad hammers. Someone using a hammer to build affordable housing is doing a good thing. Someone using a hammer to kill kittens is doing a bad thing. It’s not the fucking hammer’s fault, but it’s also not surprising that if 95% of the people buying hammers are using them to kill kittens and post videos on instagram about it to the point that manufacturers start designing their hammers with specialized kitten-killing features and advertising them for the purpose non-stop, people will get pretty fucking angry at all the stores and peddlers selling these fucking hammers on every street corner.

And that’s where we are with “generative AI” right now. Which is not really AI, by the way, none of this has any “intelligence” of any kind, that’s just a very effective sales tactic for a fundamentally really interesting but currently badly abused technology. It’s all just the world’s largest financial grift. It’s not the technology’s fault.

I work for a health tech AI company and agree, but I also agree that most AI can fuck right off and doesn’t need to be in every god damn thing.

This.

I am anti-generative AI. I am aggressively anti-generative AI. Years ago I saw someone make an AI to tell whether a mole was cancerous (the model in question was flawed because it learned that a ruler in the photo meant cancer, but that’s not the point). An image model trained exclusively to tell cancerous moles from safe ones is a useful first screening tool you could run on your phone before going in for a real test.

The same is true for applications in psychology, where, for example, early warning systems are being tried and studied. But corporations chose to force AI into everyday applications instead of focusing on science and research.

“Literally save lives,” Bullshit.

I completely get your skepticism, but I was being serious. Yes, at least one life within my organization has literally been saved with the help of an AI drug discovery tool (used by a team of geneticists). I’m not going to get into specifics because nothing from the case has been released publicly (I’m sure a case report will pop up at some point) and I don’t want to get my ass fired, but it’s not a joke that these tools can be incredibly powerful in medicine when used by human experts, including helping to save lives.

My friend does diabetes research and he was using machine learning to analyze tissue samples and the model he built is way more accurate than humans looking at the same material. There are definitely good use cases for ML in medicine.

Yes, but ML is not what people mean when they say “AI” now. They mean LLMs.

Next up, from DDG:

“Oops, looks like we lost the data of the voting, so we’ll just assume YES won because everyone loves Copilot AI, which is the best AI and has nothing to do with us having a contract with Microsoft!”

I don’t use DDG and still went to vote NO AI.

70k+ is a good representation of the users. Plenty of data points they can extrapolate and all of them point to scrapping AI. Good. Save some money and skip the slop trough.

It’s not a survey. It’s an ad. It’s an ad for noai.duckduckgo.com. The fact that we’re thinking it and talking about it means it was a good ad. But it’s just an ad. The numbers are entirely meaningless.

Nothing about this ad says that they are scrapping AI. They aren’t. They still provide AI by default. This is a way for the end user to opt out of that default.

I answered yes to see what happened. It tells me “Thanks for voting — You’re into AI. With DuckDuckGo, you can use it privately. Try Duck.ai”

No idea where they’re going to take it from here, just wanted to provide some insight on the other option.

Technically, with 93%, it’s safe to say that we all feel the same about AI.

Yeah, the Pro-AI vote is getting close to the lizardman constant.

I’m seeing 79,264 votes with the same percentages now.

Good, maybe now they can make it opt-in.

It was 94% when I first looked at it a few days ago

…and you posted a picture of a tweet, instead of something with an actual link. I do not understand. I really. Really don’t.

Thank you for this. It’s so common, I sometimes forget to be annoyed.

It’s so fucking annoying that I made a post about it.

Goddammit, that’s not the link I’m after either.

So you don’t have to go to twatter and give meckahitler your attention

In this situation I’ve used xcancel before: just replace the x in the domain name with xcancel. You still get to see the tweet.
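If you do this often, the swap is easy to script. A minimal Python sketch, assuming the link is on x.com (or www.x.com) and that xcancel.com serves the same path; the function name `to_xcancel` and the example URL are hypothetical:

```python
from urllib.parse import urlsplit, urlunsplit

# Hosts we rewrite; assumption: only the plain x.com domains matter here.
X_HOSTS = {"x.com", "www.x.com"}


def to_xcancel(url: str) -> str:
    """Point an x.com link at xcancel.com, keeping the path and query intact."""
    parts = urlsplit(url)
    # .hostname is lowercased by urlsplit, so "X.com" also matches.
    if (parts.hostname or "") in X_HOSTS:
        parts = parts._replace(netloc="xcancel.com")
    return urlunsplit(parts)


if __name__ == "__main__":
    # Hypothetical tweet URL for illustration.
    print(to_xcancel("https://x.com/someuser/status/1234567890"))
```

Non-x.com links pass through unchanged, so it is safe to run over a whole list of URLs.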

Link for the lazy: https://voteyesornoai.com/

Huh, if you select “No” it gives you an option to go to an alternate DDG homepage “noai.duckduckgo.com”. But it looks like if you just go to their normal homepage, they’ve got a link to DuckAI at the top, searching for images defaults to including AI images, and they have a Search Assist that uses AI as well.

So even though the overwhelming majority of their users have responded “No AI”, they’re still defaulting everyone to the “Yes AI” experience unless you use an alternate URL. That’s kind of shitty. I mean at least they have a “no” option, but seems like it should be the default.

First of all, the vote is from last week, so no measures have been taken yet. The vote is still live.

Secondly I think it was more of an ad for their AI, that backfired, because if I remember correctly, the “no” answer didn’t provide the link to noai.duckduckgo.com when I first answered.

Lastly, I hope that this does change some minds in their C-suite. Having no AI as a standard would be a good start, but them filtering AI images is actually a bonus. This should be expanded upon.

Maybe this poll is to see if they should switch it to off by default?

The lazy, of course, being OP who went through the trouble to… post a picture instead of anything useful.

Thank you!

I like the part where we’re now pretending they’re “pushing back” on forced AI almost a full year after implementing default, forced AI.

Where was this “norm” a year ago? Did the AI implement itself into DDG’s main page by accident? Were they hacked? /s

It’s fine that they made a mistake including default AI. But it’s long overdue for them to admit that, and have some accountability, and maybe provide an apology, and an explanation. Instead, we get this milquetoast “Some people like it, some people don’t, we weren’t wrong it must’ve been you guys who changed your minds, but we’re the good guys here, because now we’re asking you!” gaslighting.

With all due respect, fuck you, duckduckgo.

Took them a while. (Probably after countless feedback submissions criticizing it)

Them having it enabled by default actually made me switch away from them. I’m trying out Startpage currently and it doesn’t seem too bad.

After completing the survey, and of course wanting NO AI, DuckDuckGo of course suggested using their “no AI” search engine, bragging that “We’ve Turned Off AI‑Assisted Answers” and “We’ve Removed AI‑Generated Images.” The #2 result on my first rather bland search was Grokipedia.

I just had the same experience. The amount of effort it takes to act ethically and stay away from literal stinking piles of toxic waste every time you need to use the computer for anything is insane. Most common browser - AI, search engine - AI, messaging app - AI, phone - AI, TV - AI. And I use the term “AI” quite liberally here, at best meaning machine learning and at worst an LLM.

I already use noai.duckduckgo.com but I would rather no AI be the default

I just set my settings on fuck fuck go to be no AI and that was cool

VoteYesOrNoAI.com to save others from typing

I couldn’t find a link to it from DDG’s homepage or blog, but a search gave https://duckduckgo.com/vote which redirects to the above.

I find the Kagi implementation interesting. You will only get AI results if you end your query with “?”.

“How do I make cornbread” = search results for cornbread recipes.

“How do I make cornbread?” = AI generated recipe response.
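The trigger rule described above is simple enough to sketch. This is a hypothetical Python illustration of the behaviour as described in this comment, not Kagi’s actual code; `route_query` and the `ai_enabled` switch (standing in for a setting that turns the feature off) are made-up names:

```python
def route_query(query: str, ai_enabled: bool = True) -> str:
    """Return "ai" for queries ending in "?" (when the feature is on), else "search".

    Hypothetical sketch of the described behaviour; trailing whitespace is ignored.
    """
    if ai_enabled and query.rstrip().endswith("?"):
        return "ai"
    return "search"


if __name__ == "__main__":
    for q in ("How do I make cornbread", "How do I make cornbread?"):
        print(f"{q!r} -> {route_query(q)}")
```

The appeal of this design is that AI output becomes an explicit opt-in per query rather than something bolted onto every results page.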

Can you kindly fuck off?

No, no, no! The question mark calls the AI slop genie! Now you’ve done it.

Kagi also has an option to turn that feature off! :-)

I’m doing my part :)

BTW you can see the absolute results or broken down by country and US state here

Just a small note, in uBlock Origin there is a blocklist named “EasyList - AI Widgets” that seems to work fine, at least with Google. It also has entries for duckduckgo.com.

To get there:

- Firefox
- application menu ≡ (or from the menu bar: Tools)
- Extensions and themes
- to the right of uBlock Origin, click the …
- Preferences
- Filter lists (at the top)
- scroll down to Annoyances
- under EasyList - Annoyances
- you can select EasyList - AI Suggestions

This is stupid.

As I always preach, I am one of Lemmy’s rare local LLM advocates. I use “AI” every day. But I’d vote no.

The real question isn’t if you are for or against AI.

It’s if you support Tech Bro oligarchy and enshittification, or prefer being in control of your tech. It’s not even about AI; it’s the same dilemma as Fediverse vs Big Tech.

And that’s what Altman and such fear the most. It’s what you see talked about in actual ML research circles, as they tilt their heads at the weird nonsense coming out of Altman’s mouth. Open weights AI is a race to the bottom. It’d turn “AI” into dumb, dirt cheap, but highly specialized tools. Like it should be.

And they can make trillions off that.

Well I think that the products currently being hyped as “AI” are significantly more dangerous and harmful than they will ever be useful, and I would like to burn them.

If it helps I think you just need to read the question as “Do you want AI in the form that is currently being offered.” For all intents and purposes, that’s the question being asked, because that’s how the average person is going to read it.

The fact that AI can be a totally different product that doesn’t fundamentally suck is nice to know, but doesn’t exactly offer anything to most people.

That is like arguing that climate change could have been addressed. Yes, but it was never going to be. Zero chance from day one. Zero chance even twenty-six years ago, when I learned about it in more depth.

Yes, it could have been, but it was never going to be. The chance was only ever theoretical.

The problem is being “anti AI” smothers open weights ML, doing basically nothing against corporate AI.

The big players do not care. They’re going to shove it down your throats either way. And this whole fuss is convenient, as it crushes support for open weights AI and draws attention away from it.

What I’m saying is people need to be advocating for open weights stuff instead of “just don’t use AI” in the same way one would advocate for Lemmy/Piefed instead of “just don’t use Reddit”

The Fediverse could murder trillions of dollars in corporate profit with enough critical mass. AI is the same, but it’s much closer to doing it than people realize.

Out of curiosity how are you doing a local LLM? I’ve been trying recently and the results I’ve gotten have been really subpar compared to what the big boys offer. Been using LM Studio.

Open models, running on consumer hardware, will probably be really subpar for a very long time because the AI big boys are heavily subsidizing their AIs with massive models running on massive compute farms in massive datacenters. They are paying for it with your environment, your electricity dollars, your tax dollars and your retirement fund’s dollars, since they’re all losing money all the time.

But they’re not going to do that forever (and you can’t afford to do this forever either), but that’s okay because they just want to get you onboard. It’s a trap, and you can play around with that alluring trap if you want, but they’re going to make it really easy to fall in when you do.

Once they’ve got you in the trap, they’re eventually going to tighten the screws, lock you into their ecosystem (if they haven’t already) and start extracting money from you directly. That’s coming, they’ll lock it down and enshittify until you pay them, and then they’ll enshittify that until you pay them more, because eventually they have to reach the real economics of providing these AI models, and then they have to start actually turning a profit, and you can’t even imagine how far they’ll have to eventually go to get to that point, you might as well just set your wallet on fire now.

So, if you’re expecting open, free, local models to compete directly with that, you aren’t understanding their business model. The results that big AI providers are giving you are an absolute illusion, built out of a collage of countless different lies and thefts. Those results won’t continue the way they are now; they can’t continue, because they’re not economically viable. They’re only that good so they can lure you into the trap.

Open models, on the other hand, operate in the concrete reality of the here and now. What they’re giving you is the real quality of existing models on sensible contemporary hardware using the real economics of providing these things. If you find that underwhelming, well, maybe it is, but it doesn’t mean the lies of big AI are real or ever will be. You can try and take advantage of the constant, delightful lies they’re currently showing you while you still can, but chances are they’ll end up taking advantage of you instead, because that’s what their goal is.

Yeah, accessibility is the big problem.

What I used depends.

For “chat” and creativity, I use my own version of GLM 4.6 350B, quantized to just barely fit in 128GB RAM/24GB VRAM, with a fork of llama.cpp called ik_llama.cpp:

https://huggingface.co/Downtown-Case/GLM-4.6-128GB-RAM-IK-GGUF

It’s complicated, but in a nutshell, the degradation vs the full model is reasonable even though it’s like 3 bits instead of 16, and it runs at 6-7 tokens/sec even with so much in CPU.
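The rough arithmetic behind “3 bits instead of 16” fitting in that machine: weight memory is about parameters × bits per weight ÷ 8 bytes. A back-of-the-envelope Python sketch, assuming the 350B figure above, an average of exactly 3 bits per weight, and that the RAM/VRAM sizes are GiB. It ignores the KV cache, OS overhead, and the mixed bit widths real quantized files use:

```python
def weight_gib(params: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights alone, in GiB (no KV cache, no overhead)."""
    return params * bits_per_weight / 8 / 2**30


PARAMS = 350e9          # the "350B" quoted above
BUDGET_GIB = 128 + 24   # system RAM + GPU VRAM, assumed to be GiB

if __name__ == "__main__":
    for bits in (16, 3):
        size = weight_gib(PARAMS, bits)
        verdict = "fits" if size < BUDGET_GIB else "does not fit"
        print(f"{bits:>2}-bit weights: {size:6.1f} GiB ({verdict} in {BUDGET_GIB} GiB)")
```

At 3 bits this comes out to roughly 122 GiB, which squeezes into the 152 GiB combined budget with little room left for context; at 16 bits it would be roughly 652 GiB.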

For the UI, it varies, but I tend to use mikupad so I can manipulate the chat syntax. LMStudio works pretty well though.

Now, for STEM stuff or papers? I tend to use Nemotron 49B quantized with exllamav3, or sometimes Seed-OSS 36B, as both are good at that and at long context stuff.

For coding, automation? It… depends. Sometimes I use Qwen VL 32B or 30B in various runtimes, but it seems that GLM 4.7 Flash and GLM 4.6V will be better once I set them up.

Minimax is pretty good at making quick scripts, while being faster than GLM on my desktop.

For a front end, I’ve been switching around.

I also use custom sampling. I basically always use n-gram sampling in ik_llama.cpp where I can, with DRY at modest temperatures (0.6?). Or low or even zero temperature for more “objective” things. This is massively important, as default sampling is where so many LLM errors come from.
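To make the temperature part concrete, here is a generic Python sketch of plain temperature sampling over one step’s logits, with zero temperature falling back to greedy (argmax) decoding. It does not implement DRY or n-gram sampling, which add penalties on top of this, and it is not ik_llama.cpp’s code; the function names and toy logits are illustrative only:

```python
import math
import random


def temperature_probs(logits, temperature):
    """Softmax over logits divided by temperature (temperature must be > 0)."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max so exp() cannot overflow
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_token(logits, temperature, rng=None):
    """Return the index of the chosen token.

    temperature <= 0 means greedy decoding: always take the highest logit.
    Lower positive temperatures sharpen the distribution; higher ones flatten it.
    """
    if temperature <= 0:
        return max(range(len(logits)), key=lambda i: logits[i])
    rng = rng or random.Random()
    probs = temperature_probs(logits, temperature)
    return rng.choices(range(len(logits)), weights=probs, k=1)[0]


if __name__ == "__main__":
    toy_logits = [2.0, 1.0, 0.1]  # made-up scores for three candidate tokens
    for t in (1.0, 0.6):
        print(t, [round(p, 3) for p in temperature_probs(toy_logits, t)])
    print("greedy pick:", sample_token(toy_logits, 0))
```

Dropping from 1.0 to 0.6 shifts probability toward the top candidate, which is why modest temperatures cut down on random off-track tokens, and zero temperature makes output deterministic.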

And TBH, I also use GLM 4.7 over API a lot, in situations where privacy does not matter. It’s so cheap it’s basically free.

So… Yeah. That’s the problem. If you just load up LMStudio with its default Llama 8B Q4_K_M, it’s really dumb and awful and slow. You almost have to be an enthusiast following the space to get usable results.

Thank you, very insightful.

Really the big distinguishing feature is VRAM. Us consumers just don’t have enough. If I could have a 192GB VRAM system I prolly could run a local model comparable to what OpenAI and others offer, but here I am with a lowly 12GB.

You mean an Nvidia 3060? You can run GLM 4.6, a 350B model, on 12GB VRAM if you have 128GB of CPU RAM. It’s not ideal though.

More practically, you can run GLM Air or Flash quite comfortably. And that’ll be considerably better than “cheap” or old models like Nano, on top of being private, uncensored, and hackable/customizable.

The big distinguishing feature is “it’s not for the faint of heart,” heh. It takes time and tinkering to setup, as all the “easy” preconfigurations are suboptimal.

That aside, even if you have a toaster, you can invest a bit in API credits and run open weights models with relative privacy on a self-hosted front end. Pick the jurisdiction of your choosing.

For example: https://openrouter.ai/z-ai/glm-4.6v

It’s like a dollar or two per million words. You can even give a middle finger to Nvidia by using Cerebras or Groq, which don’t use GPUs at all.

This is cool. I’d also be interested what people’s primary reason for voting the way they did is. I think for me it’s an environmental thing. I could get used to ignoring ai results at the top, but knowing those use so much electricity to also serve me something I rarely find useful is gross.

For me, it’s about efficiency and reliability: when I’m searching, I’m looking for what I want, not what something thinks I want. Also, once you learn the search operators and how to phrase a query, you can generally get what you are looking for (if it even exists) within the first few results.

I clicked “NO AI”, and the result page showed “YES AI Thanks for voting — You’re into AI. With DuckDuckGo, you can use it privately.”

Is it because I have cookies off by default, and haven’t whitelisted this site for cookies?

Is it because I have NoScript? I had to allow voteyesornoai.com temporarily in order to see anything other than an orange page.

Yip, allowing that domain to set cookies correctly showed “NO AI Thanks for voting — You’d rather skip AI. With DuckDuckGo, you can, because it’s optional.” after voting.

That’s next-level stupid. Do the people at DDG know that cookies can be deleted, or blocked? Was this site made using AI?