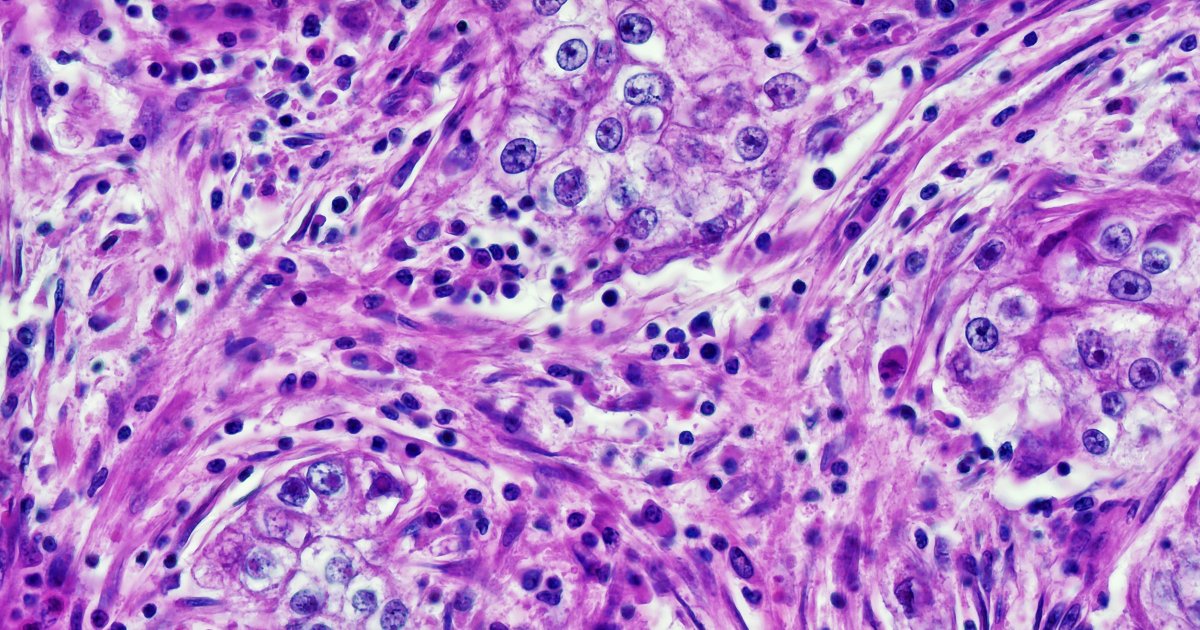

From reading the article, the issue was that there was some difference in the cells in the sample. This difference was not in the training data, so the AI fell over at what appears to be a cellular anomaly it had never seen before. Because it’s looking for any deviation, to catch things a human would be totally oblivious to, it tried to analyze a pattern that nothing in its training data fit.

After the fact, humans with broader experience and reasoning analyzed the anomaly and determined it to be a red herring correlated with race, not cancer. The model has no access to race/ethnicity data; it was simply influenced by a feature it had inadequate data on. For all the model knew, it could have been a novel consequence of that sample’s cancer, one that rightfully should keep it from classifying the sample as a subtype that lacks this observed phenomenon. The article said it failed to identify the subtype of cancer, but didn’t say if it would, for example, declare it benign. If the result was “unknown cancer, human review required”, that would be a good outcome given the data. If the outcome was “no worries, no dangerous cancer detected”, that would be bad, and the article doesn’t clarify which case we are talking about.

As something akin to machine vision, it’s even “dumber” than an LLM. The only way to fix this is to secure a large volume of labeled training data so the statistical model learns to ignore those cellular differences. If it flagged unrecognized phenomena as “safe”, that would more likely be a matter of traditional programming: assigning a different outcome to low-confidence output from the model.
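To make the “traditional programming” part concrete, here is a minimal sketch of what routing low-confidence output to human review could look like. Everything in it is an assumption for illustration: the label names, the 0.80 threshold, and the `triage` function are hypothetical, and nothing here is taken from the article or the actual model.

```python
from typing import Sequence

# Hypothetical labels and threshold, chosen only for illustration.
LABELS = ["benign", "subtype_a", "subtype_b"]
REVIEW_THRESHOLD = 0.80


def triage(probabilities: Sequence[float], threshold: float = REVIEW_THRESHOLD) -> str:
    """Map a classifier's per-class probabilities to a reported outcome.

    If the most likely class is below the threshold, report that a human
    should review the sample instead of committing to any label.
    """
    if len(probabilities) != len(LABELS):
        raise ValueError("expected one probability per label")

    # Index of the class the model considers most likely.
    best = max(range(len(LABELS)), key=lambda i: probabilities[i])

    if probabilities[best] < threshold:
        return "unknown, human review required"
    return LABELS[best]


print(triage([0.95, 0.03, 0.02]))  # confident prediction
print(triage([0.45, 0.30, 0.25]))  # no class is confident enough
```

One caveat: a model can still be confidently wrong on inputs unlike anything it was trained on, so a threshold like this is a safety net for the reporting step, not a fix for the missing training data.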

So the problem was less that the model was “racist” and more that the training data was missing a cellular phenomenon.

Non-LLM AI models are actually useful and relatively cheap on the inference end. It’s the same stuff that has run for years on your phone to find faces while you’re getting ready to take a picture. The bad news may be that an LLM “acts” smarter (but it’s still fundamentally stupid in risky ways), but the good news is that these “old fashioned” machine learning models are more straightforward, and you know what you get or don’t get.

Thanks for the quick breakdown. I checked in here because the headline is sus AF. The phrase “race data” is suspect enough and reeks of shitty journalism. But the idea that a program for statistical analysis of images can “be racist” is so so dumb and anthropomorphizing. I’m the kind of person who thinks everything is racist, but this article is just more grifter bullshit.

I actually think this is kind of a case of overfitting. The AI is factoring extra data into the analysis that isn’t one of the important variables.

> This difference was not in the training data, and so the AI fell over at what appears to be a cellular anomaly it had never seen before.

The difference was in the training data; it was just less common.

This is like if someone who knows about swans, and knows about black birds, saw a black swan for the first time and said “I don’t know what bird that is”. It’s assuming the whiteness is important when it isn’t.

Who would have imagined that a machine that is fueled by human filth would churn it back out again?

Yeah, this really captures why I hate the mass rollout of AI on a philosophical level. Some people who try to defend AI argue that biases in the models are fine because these are biases that exist in reality (and thus, the training data), but that’s exactly why this is a problem: if we want to work towards a world that’s better than the one we have now, then we shouldn’t be relying on tools that just reinforce and reify existing inequities in the world.

Of course, the actual point of disagreement in these discussions is that the AI-defender often believes that the biases aren’t a problem at all, because they tend to believe that there is some fundamental order to the world where everyone should just submit to their place within the system.

Ironically, withholding race tends to result in more racist outcomes. Here’s an easy example that actually came up. Imagine all you know of a person is that they were arrested regularly. If you had to estimate whether that person was risky based on that alone, with no further data, you would assume that person was risky.

Now add to the data that the person was black in Alabama in the 1950s. Then, reasonably, you decide the arrest record is a useless indicator.

This is what happened when a model latched onto family arrest records as an indicator of likely recidivism. Because it was denied the context of race, it tended to spit out racist outcomes. People whose grandparents had civil rights protest arrests were flagged.

That makes me think of how France has rules against collecting racial and ethnic data in surveys and the like, as part of a “colour blind” policy. There are many problems with this, but it was especially evident during the pandemic. Data from multiple countries showed that non-white people faced a significantly higher risk of dying from COVID-19, likely due in part to the long-standing and well documented problem of poorer access to healthcare and poorer standards of care once they actually are hospitalised. It is extremely likely that this trend also existed in France during the pandemic, but because they didn’t record ethnicity data for patients with COVID-19, we have no idea how bad it was. It may well have been worse, because a lack of concrete data can inhibit tangible change for marginalised communities, even if there are robust anti-discrimination laws.

Looking back at the AI example in your comment though, something I find interesting is that one of the groups of people who strongly believe that we should take race context into account in decision making systems like this is the racist right-wingers. Except they want to take it into account in a “their arrest record should count for double” kind of way.

I understand why some progressive people might have the instinct of “race shouldn’t be considered at all”, but as you discuss, that isn’t necessarily an effective strategy in practice. It makes me think of the notion that it’s not enough to be non-racist, you have to be anti-racist. In this case, that would mean taking race into account, but in a manner that would allow us to work against historical racial inequities.

Image analysis is fueled by images. This article is fueled by human filth.

I am supremely unsurprised.

Anybody watch House MD? There is an episode where House factors race into a medical decision and explains why that is a good idea. The patient refuses to take the medicine for “blacks” and insists on getting the “good shit” until House tricks him into taking it.

I’m not a doctor, and I’m not saying there isn’t plenty of racism in medicine (and therefore in the training data), but instructing medical “AI” to ignore race would potentially be wrong as well. How would you even prompt that? “Treat every patient the same regardless of ethnicity and religion” would not really work, as outlined above. Also, there is no training data without bias, as there are no humans without bias. All we can do is develop continually learning AI and start catching the wrong decisions and correcting them, to achieve the goal of LLMs making better decisions than humans ever will.

I don’t think we should use House, or any TV show, as a guiding tool or example for actual real life medicine.

This is a great exercise for a layman in understanding what this bot did and why it wasn’t as big a deal as the headline makes it out to be.

There are cases where race/ethnicity can factor into what medical treatment a person should receive. Here, though, race was unrelated, but the LLM came up with results that differed for different demographics. Faulty distribution of training data, fake correlations, etc. are not unheard of, but it grows more and more of an issue as machine learning becomes less of an engineering speciality for professionals and more of an everyday tool injected everywhere by/for everyone without much scrutiny and, in the case of LLMs, as an impenetrable, unpredictably biased black box.

Not what this is about.