You may have noticed a few of my posts here. I am very interested in self-hosting, so what advice can you give to a newbie? Maybe some literature or videos, I don’t know~

Don’t provide services to others, including your own family, actually especially your own family, until you are quite comfortable with what is going on and what might be causing issues. Focus on helping yourself or keeping whatever other services you were using before just in case.

Trying to fix something at night, with a fuming partner who’s already put up with a difficult-to-use service because of your want for privacy (even though they don’t care), whilst saying “it should work, I don’t know what’s wrong”, is not a great place to be 😁.

Overall though, I found it so interesting that I am doing a part time degree in computer science in my 30s, purely to learn more (whilst being forced to do it to timelines and having paid for it).

I have a very comfortable, ‘forget about it’ setup that my family are now using. Every now and then I add new services for myself and, depending on how it works out, I give others access, keep it just for me, or just delete it and move on.

> Trying to fix something at night, with a fuming partner who’s already put up with a difficult to use service, because of your want for privacy even though they don’t care, whilst saying “it should work, I don’t know what’s wrong”, is not a great place to be

I feel those words

I never thought of that but that’s some of the best advice there. I happily host backups for family, but I only started this year after years of random crashes, out of memory, slowness, and a dozen other things with my stack that it took a long time to iron out. Host it for fun now, more seriously later.

I have multiple servers with about two dozen self-hosted services I run. It all started ten years ago, torrenting shows and then automating. And now everything in my life is self-hosted and backed up. But if I showed my current configuration to me 10 years ago, it would look undoable, completely out of reach. So my suggestion to you is to pick one project that you like, build it. Make mistakes. Fix those mistakes. If you want to access it from outside your network, use WireGuard so that nobody else can have access to your system and find your mistakes for you.

Don’t ask for advice. Don’t ask for opinions. That’s like going into a religion conference and asking which is the right God. You’re going to have a bunch of very passionate people telling you a bunch of things you don’t understand when all you want to do is tinker. So fuck all those people, just start tinkering.

Finally, don’t host any mission critical shit until you have backups that are tested after multiple iterations. I have fucked up so bad that I have had to reformat discs. I have fucked up so bad that data has just gone missing. I have fucked up so bad that discs have overflowed with backups and corrupted the data and the backups themselves. It was all fun as shit. Because none of it was important. Everything important was somewhere else. The only rule is the 3-2-1 rule, otherwise go fuck up and come back when you dead end on an issue.
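For anyone who hasn’t met the 3-2-1 rule yet: it is usually stated as 3 copies of your data (counting the live one), on 2 different kinds of media, with 1 copy off-site. Here is a minimal Python sketch of that check. The `BackupCopy` structure and the example locations are made up for illustration, not taken from anyone’s setup in this thread.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class BackupCopy:
    """One copy of the data (hypothetical structure, for illustration only)."""
    location: str  # where the copy lives, e.g. "home server"
    medium: str    # kind of storage, e.g. "ssd", "hdd", "cloud"
    offsite: bool  # True if the copy is stored outside your home


def satisfies_3_2_1(copies: List[BackupCopy]) -> bool:
    """True if there are >= 3 copies, on >= 2 media types, with >= 1 off-site."""
    enough_copies = len(copies) >= 3
    enough_media = len({copy.medium for copy in copies}) >= 2
    has_offsite = any(copy.offsite for copy in copies)
    return enough_copies and enough_media and has_offsite


# Example plan (all values are assumptions): live data plus two backups.
plan = [
    BackupCopy(location="home server", medium="ssd", offsite=False),
    BackupCopy(location="USB disk in a drawer", medium="hdd", offsite=False),
    BackupCopy(location="disk at a friend's house", medium="hdd", offsite=True),
]

print(satisfies_3_2_1(plan))      # full plan
print(satisfies_3_2_1(plan[:2]))  # only two local copies
```

The point is less the code than the three separate conditions: lots of copies on one disk, or lots of disks in one house, still fail the rule.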

Pro tip, use ZFS and take snapshots before you make any changes. Then you can roll back your system if you fuck up. I just implemented it this year and it has saved me so many headaches.
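A rough sketch of the “snapshot before you change anything” habit, in Python. It only builds the `zfs snapshot` / `zfs rollback` command lines and does not run them. The dataset name `tank/services` and the label are hypothetical, so substitute your own.

```python
from datetime import datetime
from typing import List


def snapshot_name(dataset: str, label: str, when: datetime) -> str:
    """Build a name like 'tank/services@20240313-115500-before-upgrade'."""
    return f"{dataset}@{when:%Y%m%d-%H%M%S}-{label}"


def snapshot_command(dataset: str, label: str, when: datetime) -> List[str]:
    """Command line that would create the snapshot (not executed here)."""
    return ["zfs", "snapshot", snapshot_name(dataset, label, when)]


def rollback_command(snapshot: str) -> List[str]:
    """Command line that would roll the dataset back to the given snapshot.

    Plain 'zfs rollback' only targets the most recent snapshot; going further
    back needs '-r', which destroys the newer snapshots, so it is left out.
    """
    return ["zfs", "rollback", snapshot]


# Hypothetical dataset and label; the timestamp is fixed so the output is stable.
when = datetime(2024, 3, 13, 11, 55, 0)
snap = snapshot_name("tank/services", "before-upgrade", when)

print(" ".join(snapshot_command("tank/services", "before-upgrade", when)))
print(" ".join(rollback_command(snap)))
```

To actually run one of these you could pass the list to `subprocess.run(cmd, check=True)` as root, but try it on a dataset you don’t care about first.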

I definitely agree on starting to tinker right away, setting up snapshots/backups for your stuff, and then breaking it. It also teaches you how to roll back and restore, which is as important as setting up the snapshot/backup in the first place.

Ditto. Started 20 years ago with one service I wanted. Complicated it a little more every time some new use case or interesting trinket came up, and now it’s the most complicated network in the neighborhood. Weekend projects once a year add up.

If you have the resources, experiment with new services on a completely different server than everything else. The testing-production model exists for a reason: backups are good, but restoring everything is a pain in the ass.

I also like to keep a text editor open and paste everything I’m doing, as I do it, into that window. Clean it up a little, and you’ve got documentation for when you eventually have to change/fix it.

> I also like to keep a text editor open and paste everything I’m doing, as I do it, into that window. Clean it up a little, and you’ve got documentation for when you eventually have to change/fix it.

Smart stuff that is leaving me feeling dumb for not having thought of it myself. Shell history is a poor substitute.

For Linux this is as easy as using script, e.g.:

[user@fedoragaming ~]$ script 20240313InstallingJellyfin.log

Script started, output log file is ‘20240313InstallingJellyfin.log’.

[user@fedoragaming ~]$ exit

exit

Script done.

Edit: and for Windows I recommend using PuTTY; it can also save sessions to logs.

Thanks!

Wow, all hail MiltownClown, our Self-host mascot. That’s some impressive host count! I wouldn’t want to manage all that (I’m a documentation fiend, so that just feels like a lot of work).

Great advice, and I’d like to reiterate your ideas about backup and snapshotting. This ability to revert near-instantly is just fantastic, and 90% of the reason I’ve been running VMs on my laptops for 15+ years.

OP - separate everything into lab and production, starting with your network. Test everything in your lab, then move it to production when you’ve ironed out the kinks.

> Pro tip, use ZFS and take snapshots before you make any changes

Yes, but BTRFS does the same and is way easier for beginners :).

Learn how to use Docker containers

Came here to write exactly this. It’s a steep learning curve but well worthwhile. Although I’d specify and say: learn docker compose.

Edit: what I meant was learn the Docker CLI tools (command line tools) and use Docker Compose that way. It gives you a much better understanding of how Docker actually works behind the scenes while still keeping it high level.

Docker? Steep learning curve? You drunk mate?

When it comes to software, the current hype is to set up a minimal Linux box (old computer, NAS, Raspberry Pi) and then install everything using Docker containers. I don’t like this Docker trend because it 1) leads you towards a dependence on proprietary repositories and 2) robs you of the experience of learning Linux (more here), but it does lower the bar for newcomers and lets anyone set up something really fast.

In my opinion people should be very skeptical about everything that is “sold to the masses”: just go with a simple Debian system (command line only), SSH into it and install whatever is required, taking the time to actually learn Linux and whatnot.

I’d like to point out this is a hot take.

Enterprise infrastructure has been moving to containers for years because of scale and redundancy. Spinning up new VMs for every app failover is bloated and wasteful if the app can be put in a container.

To really use them well, like everything in IT, understanding the underlying tech can be essential.

Yes, that’s a valid use case. But the enterprise is also moving to containers because the big tech companies are pushing them into it. What people forget is that containerization also makes splitting hardware and billing customers very easy for cloud providers, something that was a real pain before. Why do you think that Google, Microsoft and Amazon never got into the infrastructure business before?

> Why do you think that google, Microsoft and Amazon never got into the infrastructure business before?

Amazon was in the infrastructure business well before containers were the “big thing”.

You’re missing the point.

What are you even claiming? Billing is just as easy for a VM as for a container.

Cgroups became a thing around 2006-2008 (developed at Google, merged into the Linux kernel in 2008), and Google and Amazon started container offerings around 2008.

And you don’t even need Docker; there are plenty of alternative engines.

> What are you even claiming? Billing is the same ease VM or container.

Before containers, hosting was mostly shared stuff (very hard to bill and very expensive when it comes to support) or VMs that people wouldn’t buy because they were expensive.

Hmm, I should maybe have added that I only ever touched the Docker CLI tools and have never used a front end of any kind. I do know that they exist, but I like having my fingers in the mechanical room, so to speak, so it was quite a steep learning curve writing my own docker compose files from scratch and learning how the syntax, environment variables and volumes work manually. To this day I still only use the CLI version of Docker because it’s the only thing I ever learned.

> writing my own docker compose files from scratch and learning the syntax, environment va

But you know that most people don’t even do that. They simply download a bunch of pre-made yaml files and use whatever GUI. You would still learn more without docker.

I can see that quickly becoming an issue if people just run random yaml files without understanding the underlying functions. I’m happy I never took that route because I learned so much.

Is there a good resource for this?

I learned it by trial and error. Look at the Docker documentation and start self-hosting something like NextCloud or Jellyfin. Any software which has a Docker image can work for you to learn. You can also use “AI” like Bing if you have any doubts.

This course might be overkill for a home server, but here’s my recommendation: https://www.udemy.com/course/docker-kubernetes-the-practical-guide/?couponCode=ST15MT31224 - it covers stuff from basic manually typed commands to Kubernetes and AWS.

Kubernetes is more than overkill for home use. I would say there’s no point in ever touching it if you’re only in self hosting as a hobby.

I use it for my home services but that’s because I also use it at work and understand it well. It is absolutely not something that a beginner should touch, especially if “docker” is a new term to them.

Sweet been interested in this myself. Thank you

It depends on what you’re self-hosting and if you want / need it exposed to the Internet or not. When it comes to software, the current hype is to set up a minimal Linux box (old computer, NAS, Raspberry Pi) and then install everything using Docker containers. I don’t like this Docker trend because it 1) leads you towards a dependence on proprietary repositories and 2) robs you of the experience of learning Linux (more here), but it does lower the bar for newcomers and lets you set up something really fast. In my opinion you should be very skeptical about everything that is “sold to the masses”: just go with a simple Debian system (command line only), SSH into it and install what you really need, and take your time to learn Linux and whatnot.

A few notable tools you may want to self-host include: Syncthing, FileBrowser, FreshRSS, Samba shares, Nginx etc., but it all depends on your needs.

Strictly speaking about security: if we’re talking about LAN-only, things are easy and you don’t have much to worry about, as everything will be inside your network and thus protected by your router’s NAT/firewall.

For internet facing services your basic requirements are:

- Some kind of domain / subdomain, paid or free;

- Preferably a home ISP that provides public IP addresses - no CGNAT BS;

- Ideally a static IP at home, but you can do just fine with a dynamic DNS service such as https://freedns.afraid.org/.

Quick setup guide and checklist:

- Create your subdomain for the dynamic DNS service https://freedns.afraid.org/ and install the daemon on the server - will update your domain with your dynamic IP when it changes;

- List what ports you need remote access to;

- Isolate the server from your main network as much as possible. If possible have them on a different public IP, either using a VLAN or better yet with an entire physical network just for that - this avoids VLAN hopping attacks and DDoS attacks on the server that would also take your internet down;

- If you’re using VLANs then configure your switch properly. Decent switches allow you to restrict the WebUI to a certain VLAN / physical port - this makes sure that if your server is hacked, the attacker won’t be able to access the switch’s UI and reconfigure their own port to access the entire network. Note that cheap TP-Link switches usually don’t have a way to specify this;

- Configure your ISP router to assign a static local IP to the server and port forward what’s supposed to be exposed to the internet to the server;

- Only expose required services (nginx, game server, program x) to the Internet. Everything else such as SSH, configuration interfaces and whatnot can be moved to another private network and/or a WireGuard VPN you can connect to when you want to manage the server;

- Use custom ports with 5 digits for everything - something like 23901 (up to 65535) to make your service(s) harder to find;

- Disable IPv6? Might be easier than dealing with a dual stack firewall and/or other complexities;

- Use nftables / iptables / another firewall and set it to drop everything but those ports you need for services and management VPN access to work - 10 minute guide;

- Configure nftables to only allow traffic coming from public IP addresses (IPs outside your home network IP / VPN range) to the Wireguard or required services port - this will protect your server if by some mistake the router starts forwarding more traffic from the internet to the server than it should;

- Configure nftables to restrict what countries are allowed to access your server. Most likely you only need to allow incoming connections from your country; more details here.
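To illustrate the “only allow traffic coming from public IP addresses” item in the checklist above, here is the logic written out in Python. This is not nftables configuration, just a sketch of which source addresses such a rule would let through to an Internet-facing port. The LAN and VPN ranges are assumptions, so replace them with your own.

```python
import ipaddress

# Assumed ranges (examples only): your home LAN and your WireGuard VPN subnet.
HOME_LAN = ipaddress.ip_network("192.168.1.0/24")
VPN_RANGE = ipaddress.ip_network("10.8.0.0/24")


def allowed_on_public_port(source: str) -> bool:
    """True if the source address is outside both the home LAN and the VPN range.

    Mirrors the idea of the checklist rule: Internet-facing ports should only
    see traffic that really comes from outside your own networks.
    """
    addr = ipaddress.ip_address(source)
    return addr not in HOME_LAN and addr not in VPN_RANGE


for source in ("203.0.113.7", "192.168.1.50", "10.8.0.2"):
    print(source, allowed_on_public_port(source))
```

The real rule would live in your firewall ruleset; the sketch is only meant to make the intent of that bullet easier to reason about before you write it.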

Realistically speaking, if you’re doing this just for a few friends, why not require them to access the server through a WireGuard VPN? This will reduce the risk a LOT and probably won’t impact performance. Here’s a decent setup guide, and you might use this GUI to add/remove clients easily.

Don’t be afraid to expose the Wireguard port, because if someone tries to connect and doesn’t authenticate with the right key, the server will silently drop the packets.

Now if your ISP doesn’t provide you with a public IP / port forwarding abilities, you may want to read this in order to find out why you should avoid Cloudflare tunnels and how to set up an alternative / more private solution.

My Debian hypervisor does have a DE (GNOME) so that I can easily access virtual machines with virt-manager if I mess up their networking. My Debian VMs run CLI only though.

Regarding your last section I agree strongly - I only expose my vpn with no other incoming ports open. You also don’t need to invest in a domain if you do it this way.

I don’t mind helping my friends install their openvpn client and certificate and it’s nice to not have my services bombarded with failed connection attempts.

> My Debian Hypervisor do have a DE (GNOME) to be able to easily access virtual machines with virt-manager

Well I guess that depends on your level of proficiency with the cli. I personally don’t want a DE running ever, in fact my system doesn’t even have a GPU nor a CPU that can do graphics.

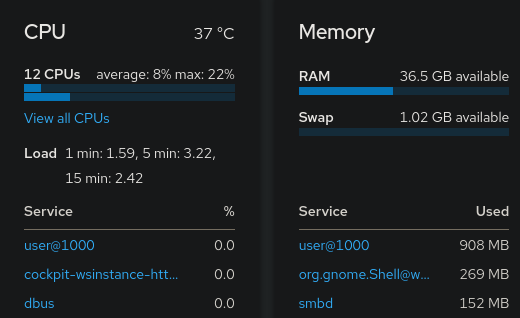

With that said, do you know about Cockpit? It provides you with a very light WebUI for any server and has a virtual machine manager as well.

> I don’t mind helping my friends install their openvpn client and certificate and it’s nice to not have my services bombarded with failed connection attempts.

Yes I know the feeling ahahah. Now you should consider Wireguard, it’s way easier and lighter. Check out the links I provided, there’s a nice WebUI to provision clients there.

> Cockpit

I do know about and use Cockpit with said virtual machine manager, but I mostly use it as a shutdown/boot/restart app on my phone and a convenient service monitor and log viewer when troubleshooting.

> Wireguard/OpenVPN

I really should try out Wireguard sometime but currently OpenVPN is fast enough for my bandwidth and I was already proficient with setting it up before Wireguard.

The WebUI definitely looks useful.

> I do know about and use Cockpit with said virtual machine manager

So… no need for a DE :) Wireguard is so damn good, even if you set it up manually it’s just easier.

> So… no need for a DE :)

No real need for me to remove it either, but your point stands. :)

Well, it’s not just about RAM. A DE comes with dozens of packages and things that get updated, startup delays and whatnot.

This is a good list, but I didn’t see you mention SSL certificates. If you’ve gone through all your steps, you should be able to use LetsEncrypt to get free, automatically managed SSL certs for your environment.

Totally agree. :) Here’s a quick and nice guide: https://www.digitalocean.com/community/tutorials/how-to-secure-nginx-with-let-s-encrypt-on-debian-11

Honestly the single biggest thing to self-hosting is breaking stuff.

Host stuff that seems interesting to you, and dick around with it. If it breaks, read the logs and try to fix. If you can’t, revert to a backup and try to reproduce.

If you start out with things that interest you, you’ll more likely stick with the hobby. From there you can move to hosting things with external access - maybe vpn inside your own network through your router?

From there, get your security in line and host a basic webserver. Something small, with a small attack surface, and build on it. Then expand!

Definitely recommend docker to start with - specifically docker compose. Read the documentation and mess around!

First container I would host is portainer. General web admin/management panel for containers.

Good luck :).

Breaking things is the best way to learn. Accidentally deleting your container data is one of the best ways to learn how to not do that AND learn about proper backups.

Breaking things and then trying to restore from a backup that… doesn’t work is a great way to learn about testing backups and/or properly configuring them.
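One simple way to test a backup is to restore it somewhere else and compare checksums against the original. Below is a minimal Python sketch of that idea. The directory layout is created in a temporary folder purely for demonstration, and all file names are made up.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path
from typing import Dict, List


def sha256_of(path: Path) -> str:
    """SHA-256 of a file, read in chunks so large files don't fill memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(1024 * 1024), b""):
            digest.update(chunk)
    return digest.hexdigest()


def checksums(root: Path) -> Dict[str, str]:
    """Map each file's path (relative to root) to its SHA-256."""
    return {
        path.relative_to(root).as_posix(): sha256_of(path)
        for path in sorted(root.rglob("*"))
        if path.is_file()
    }


def compare_restore(original: Path, restored: Path) -> List[str]:
    """Relative paths that are missing or different in the restored copy.

    Extra files that exist only in the restored copy are ignored.
    """
    want = checksums(original)
    got = checksums(restored)
    return sorted(name for name, digest in want.items() if got.get(name) != digest)


# Demo with throwaway data: a "restore" that is fine, then one that is damaged.
with tempfile.TemporaryDirectory() as tmp:
    original = Path(tmp) / "original"
    restored = Path(tmp) / "restored"
    (original / "notes").mkdir(parents=True)
    (original / "a.txt").write_text("hello")
    (original / "notes" / "b.txt").write_text("world")

    shutil.copytree(original, restored)  # stands in for a real restore
    clean = compare_restore(original, restored)

    (restored / "notes" / "b.txt").write_text("corrupted")
    damaged = compare_restore(original, restored)

print(clean)
print(damaged)
```

In real use `original` would be your live data and `restored` the folder your backup tool restored into. An empty list means every original file came back intact.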

The corollary to this is: just do stuff. Analysis paralysis is real. You can look up a dozen “right ways” to do things and end up never starting.

My advice: just start. If you end up backing yourself into a corner where you can’t scale or easily migrate to another solution, oh well. You either learn that lesson or figure out a way to migrate. Learning all along the way.

Each failure or screw up is worth a hundred “best practice / how to articles”.

So, I added Home Assistant and Homarr to my docker compose stack. When I updated the stack to pull the new images, I lost all my saved info and files. Why is this? I’m imagining I need to define a storage point for the files?

Yep, that’s exactly what you need. It’s a Docker rite of passage to not have a volume set up and lose all of your settings/data.

What you are talking about is volumes. You can probably Google a dozen examples but I highly recommend trying ChatGPT for questions like that.

It’s pretty good at telling you what you need to do or how to fix an issue with your compose file.

Look up docker volumes.
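To make the volumes answers concrete: each service in a compose file needs a `volumes` entry that maps storage on the host to the path where the app keeps its data. Without one, the data lives inside the container and is thrown away when the container is recreated from a new image. The Python sketch below represents a compose file as a plain dict (roughly what a YAML parser would give you) and lists the services that have no volumes. The service names come from the question above; the image names and paths are placeholders, not the real values for those apps.

```python
from typing import Dict, List

# Compose file as a dict. Image names and paths are placeholders (assumptions).
compose = {
    "services": {
        "homeassistant": {
            "image": "example/homeassistant",
            # "host path : path inside the container" (hypothetical paths)
            "volumes": ["./homeassistant-config:/config"],
        },
        "homarr": {
            "image": "example/homarr",
            # No "volumes" key: settings vanish when the container is recreated.
        },
    }
}


def services_without_volumes(compose_data: Dict) -> List[str]:
    """Names of services that declare no volumes at all."""
    return sorted(
        name
        for name, service in compose_data.get("services", {}).items()
        if not service.get("volumes")
    )


missing = services_without_volumes(compose)
print(missing)
```

Check each image’s documentation for which container paths actually hold its data; mapping the wrong path loses data just the same.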

Basic knowledge that makes selfhosting easier

1. Some networking basics (firewall, VPN, NAT, DHCP, ARP, VLAN) make every self-hoster’s life easier.

1b. Your ISP router probably sucks, but you might be able to experiment with some static DHCP at least. I’m a fan of the BSD-based routers opnsense/pfsense, but depending on what router you have you might also be able to run OpenWrt on your existing router.

2. Some management system and file-sharing basics (NFS, SMB, SSH, SCP and SFTP).

3. Learning how to set up a backup for your stuff. The hypervisor you choose may or may not have a built-in solution.

4. Checking out a few different hypervisors (Proxmox, Incus, KVM/QEMU, etc.) and finding out which one you want to dive deeper into.

4b. Learn how to make a snapshot for easy rollback in said hypervisor ASAP. Being able to undo the last changes that broke a machine is a godsend.

4c. VM, LXC, Docker and Podman basics (what are they, how do they differ, which one fits my use case?)

I know Flackbox has a good CCNA (networking) study guide on YouTube, but that is way too in-depth for a self-hosting beginner.

Here’s some introduction to different parts of the network:

Free CCNA 200-301 Course 06-05: IPv4 Addresses

Free CCNA 200-301 Course 23-01: DHCP Introduction

Free CCNA 200-301 Course 12-04: ARP Address Resolution Protocol

Free CCNA 200-301 Course 21-01: VLANs Introduction

Free CCNA 200-301 Course 21-04: Why we have VLANs

Awesome, thx for sharing

> Some networking basics (Firewall, VPN, NAT, DHCP, ARP, VLAN) makes every selfhosters life easier.

Be sure to make regular backups of your data.

… and using RAID is not a backup.

Separate everything into lab and production, starting with your network. Test everything in your lab, then move it to production when you’ve ironed out the kinks.

So set up an isolated network in your router (pretty much all can do it these days), and test everything there.

Alternatively, build an isolated test environment in a virtualization solution, like ESXi or Proxmox - though these are both advanced skillsets (or at least not beginner). ESXi is easier to learn than Proxmox, and you should still be able to get a copy.

Try things out. Get an old pc with some old hard drives no one will want and get to it!

Just install whatever on it and start asking yourself what you want to do with it.

I started on Ubuntu Server and ended up on Unraid. I caused a lot of problems along the way, but in the end I learned a lot, to the point where I don’t stress too much when stuff goes south.

Also, when you truly do invest in a proper server, consider that the drives you are buying are going to die one day. It may take years to happen, but it will happen!

already have one old pc :)

keep it simple ;)

Acronyms, initialisms, abbreviations, contractions, and other phrases which expand to something larger, that I’ve seen in this thread:

| Fewer Letters | More Letters |
| --- | --- |
| CGNAT | Carrier-Grade NAT |
| DNS | Domain Name Service/System |
| ESXi | VMWare virtual machine hypervisor |
| HA | Home Assistant automation software ~ High Availability |
| HTTP | Hypertext Transfer Protocol, the Web |
| IP | Internet Protocol |
| LXC | Linux Containers |
| NAS | Network-Attached Storage |
| NAT | Network Address Translation |
| RAID | Redundant Array of Independent Disks for mass storage |
| SSH | Secure Shell for remote terminal access |
| SSL | Secure Sockets Layer, for transparent encryption |
| VPN | Virtual Private Network |
| VPS | Virtual Private Server (opposed to shared hosting) |
| ZFS | Solaris/Linux filesystem focusing on data integrity |
| nginx | Popular HTTP server |

[Thread #600 for this sub, first seen 13th Mar 2024, 11:55]

That depends. How new are you? What do you already know?

I know the Linux basics. That’s all.

Learn docker. That should probably be #1. That will open up a world of self hosting options.

Depends a bit on what you’d like to achieve. I’d say play around with YunoHost on a VPS to get started and learn more. With YunoHost you get an XMPP and email server right after installation, and then you can start installing other YunoHost apps.

As another noob, Tailscale is great.

I’ve been playing with VPNs for a week or two and couldn’t get anything running. Then I remembered I have Tailscale installed on my Home Assistant, so I opened it up on my phone and connected.

And now I can access my network from work, and can use WhatsApp when I’m connected to work’s WiFi even though it’s been blocked on their firewall.

I’ve just been listening to the Self Hosted podcast (who are sponsored by Tailscale) and learned that you can basically do networking with Tailscale by putting everything on the Tailnet. So now I’m going down that rabbit hole.

But I’ve run Home Assistant for years and used reverse proxies and Cloudflare to access it from outside my network, yet now I’m learning I can just have Tailscale on all the time on my phone and I’m on my own network wherever I am in the world, which is amazing considering I just put an add-on on my HA instance and scanned a QR code.