A 13-year-old girl at a Louisiana middle school got into a fight with classmates who were sharing AI-generated nude images of her

The girls begged for help, first from a school guidance counselor and then from a sheriff’s deputy assigned to their school. But the images were shared on Snapchat, an app that deletes messages seconds after they’re viewed, and the adults couldn’t find them. The principal had doubts they even existed.

Among the kids, the pictures were still spreading. When the 13-year-old girl stepped onto the Lafourche Parish school bus at the end of the day, a classmate was showing one of them to a friend.

“That’s when I got angry,” the eighth grader recalled at her discipline hearing.

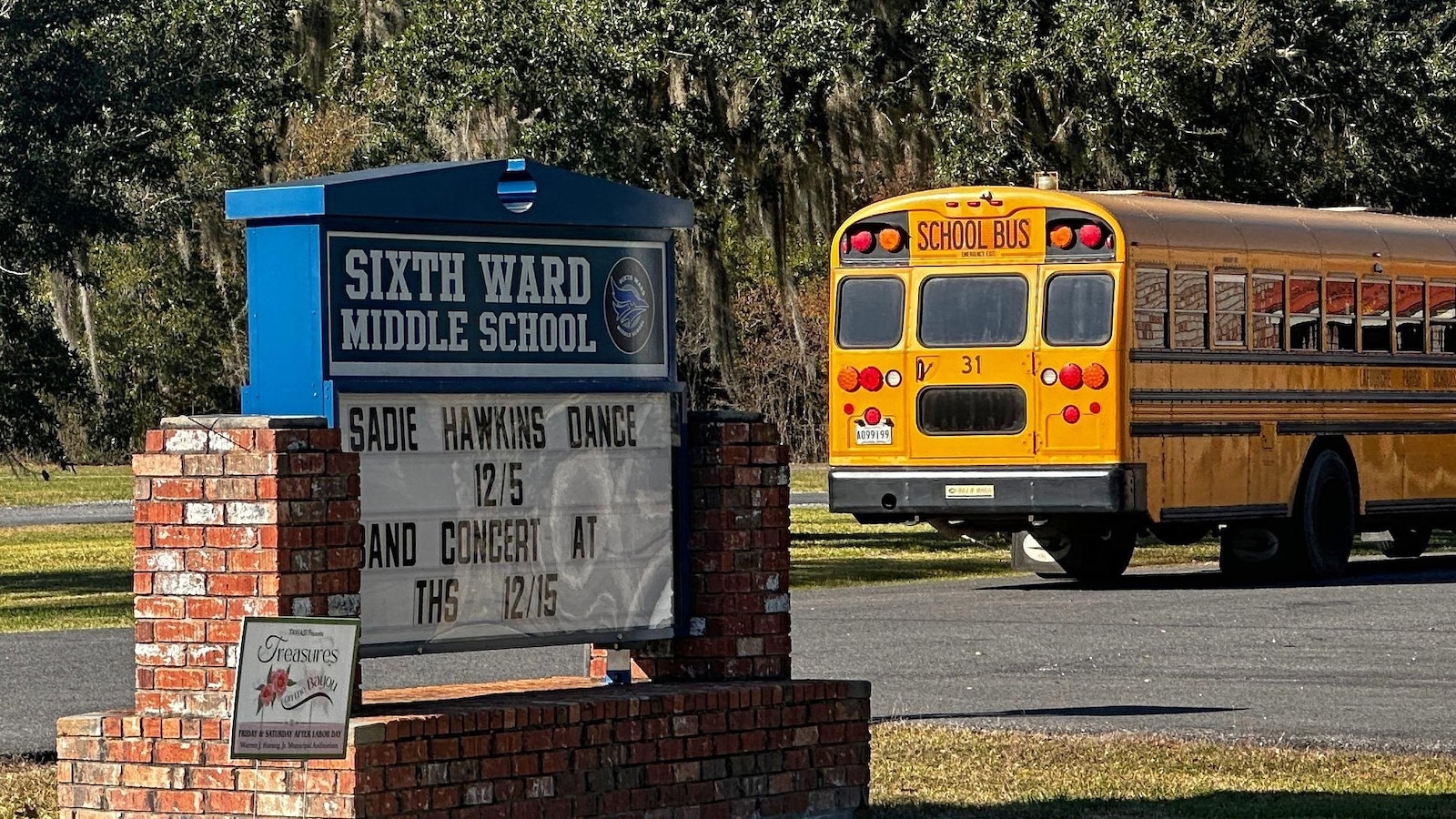

Fed up, she attacked a boy on the bus, inviting others to join her. She was kicked out of Sixth Ward Middle School for more than 10 weeks and sent to an alternative school. She said the boy whom she and her friends suspected of creating the images wasn’t sent to that alternative school with her. The 13-year-old girl’s attorneys allege he avoided school discipline altogether.

Removed by mod

You can literally say that about any crime, including rape; it’s an awful take.

You isolate whoever did it and who spread it and give them 1000 hours of community service.

Removed by mod

Not really a fair comparison. And sadly he has a point. This is only going to get worse as the software gets better and easier to obtain. Identifying who actually spread it is also going to keep getting harder unless you allow the government to have full access to anything you do on your phone and such, which would have a lot of very bad side effects.

I do disagree with the person on the ignore it part. It shouldn’t be ignored. It should be sought out and punished heavily as you suggest. But you need a two-pronged approach, where trying to stop it is one prong and working to build confidence in our daughters, so that they can better deal with this inevitably increasing activity, is the other. I honestly worry more about people using such generated imagery to extort girls into doing things they don’t want to do. And only confidence in themselves can protect them from that. But in this world, it’s hard to build confidence in much of anything.

Are you kidding me? You can’t ignore rape, lol.

You also can’t ignore fake porn being made of you, that’s the point they were making. Do you know how violating that is? People still tell women to ignore sexual harassment and rape all the fucking time. None of these are solutions except for those who want to sweep these issues under the rug.

Removed by mod

Holy fucking christ that is the biggest steaming dump of a take I’ve ever seen.

Not if it’s being distributed to others or you are being harassed by it.

Basically if you possibly even know that it has been done, then it’s a bigger problem than the material itself.

If, hypothetically, a boy ran a local model to generate such material for himself without ever sharing, then well it’s obviously going to be ignored because no one else in the world even knows it exists. The moment another person becomes a party to the material, it is injurious to the subject.

In Germany they have a law under which you own your own image, so people cannot use your image without your permission.

Cannot legally use your image.

That doesn’t stop assholes from doing it and hoping to get away with it.

Yeah, but you could sue, maybe.

There’s always the option of holding people accountable. The problem is that our society thinks that abusing women is the right of men.

I’m just going to ignore the fuckwits who think this is something to be ignored. “Stabbings are going to happen so we should just learn to ignore them.” This idiotic logic could be applied to basically everything. People WILL break the rules, sure, and your response is “just let rampant child porn be a thing because it’s going to happen anyway.” No, you still try to prevent the bad thing from happening, because it will happen a lot more if you don’t.

Removed by mod